Who Decides What AI Should Never Do?

Envision a Kenyan entrepreneur thriving on AI-tailored advice about optimal chicken breeds and boosting profits by 15%, while her low-skilled peer falters, with revenues dropping 8% after generic suggestions. Harvard Business School’s September 2025 study revealed exactly this split.

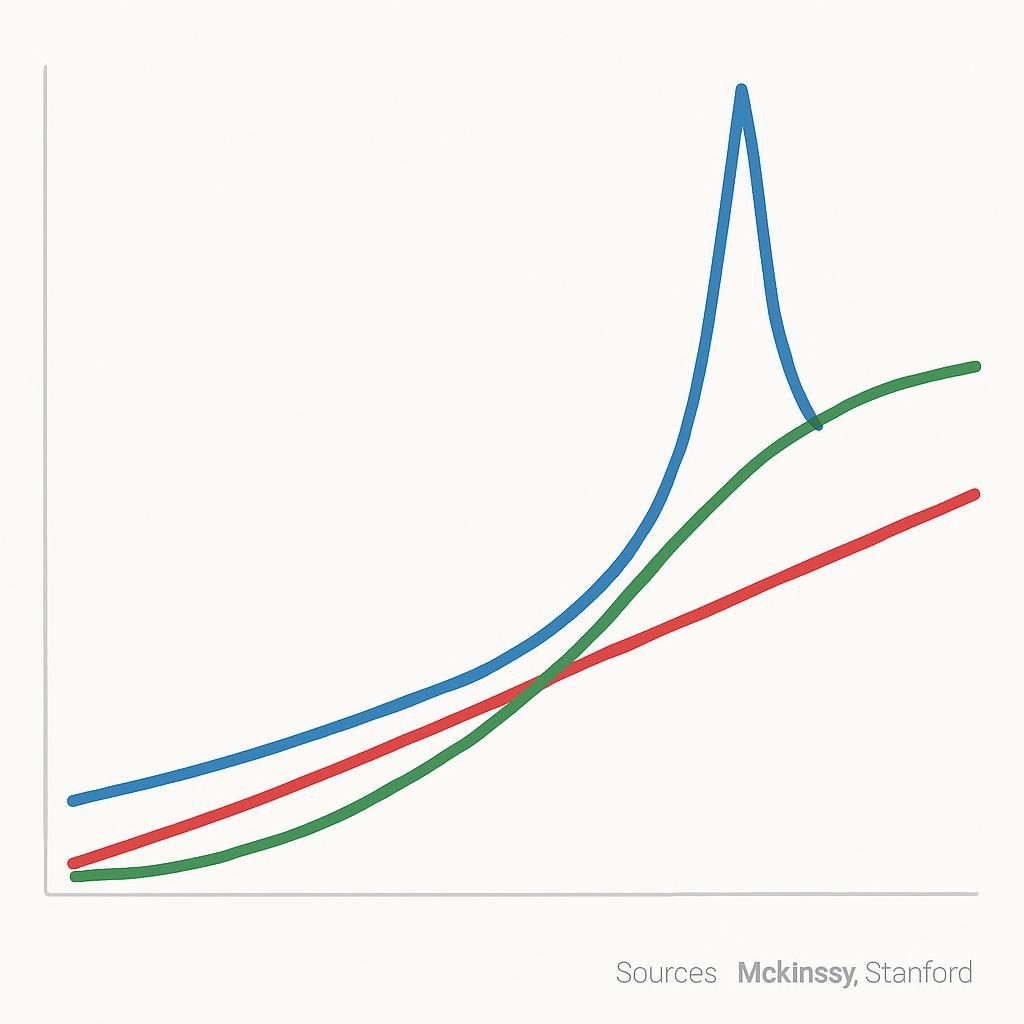

With 51% of AI projects facing ethical issues (McKinsey 2025) and global spending reaching $200 billion (Stanford AI Index), a coalition of governments, UNESCO, companies, and experts is moving quickly to set rules that avoid these problems without sacrificing the $15.7 trillion AI could add to the global economy by 2030.

This masterclass empowers leaders, policymakers, and citizens with cutting-edge insights, tools, and strategies to shape AI’s red lines, turning ethical challenges into equitable innovation.

Quick Answer: Mapping AI’s Ethical Sentinels

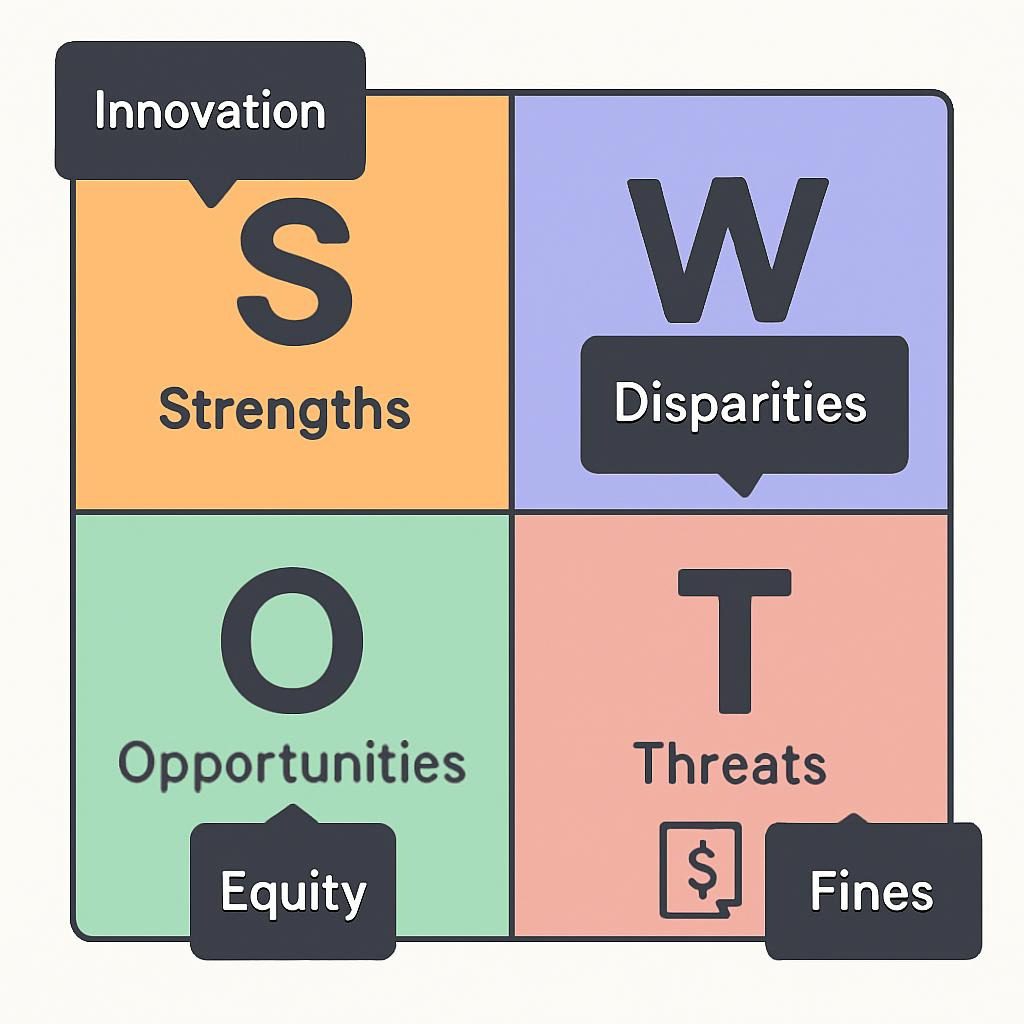

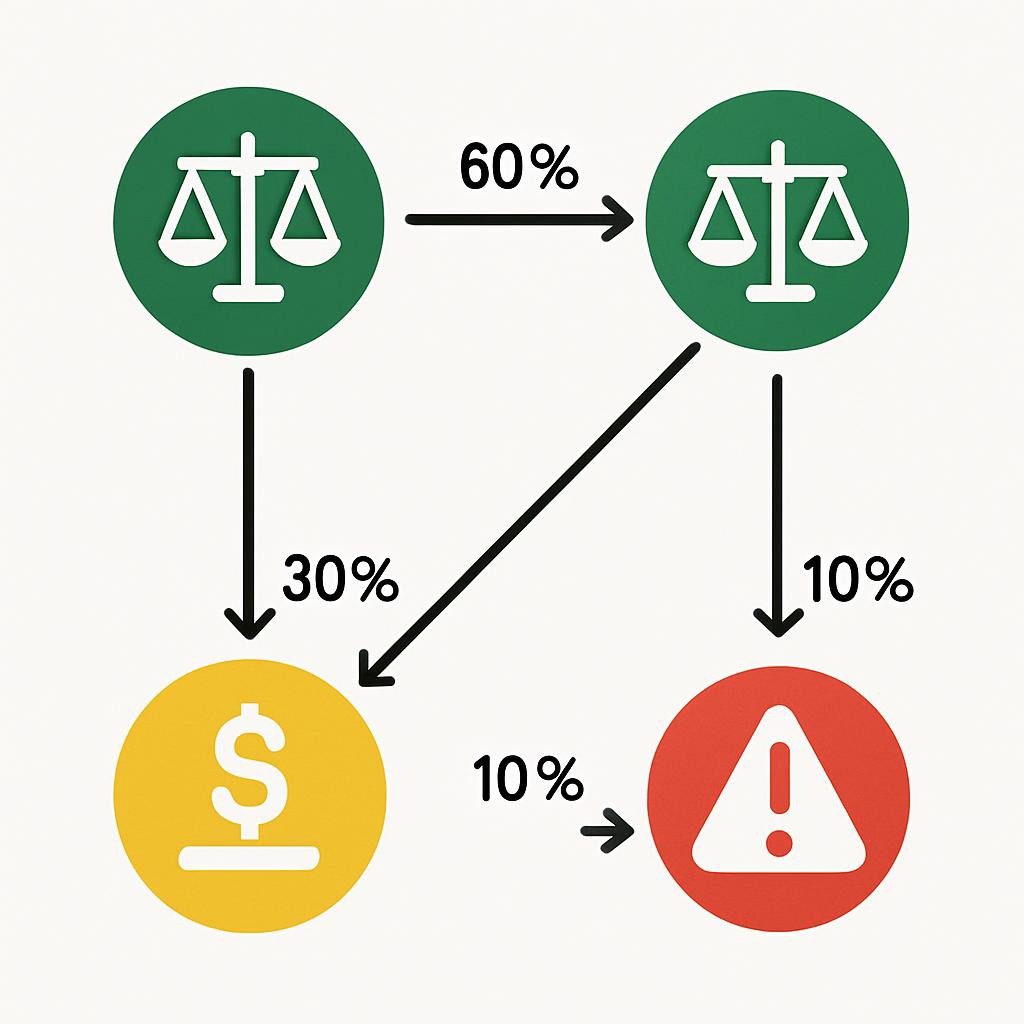

Who determines AI’s prohibitions, such as bias amplification or unchecked lethal autonomy? The answer is a dynamic, multi-stakeholder web: governments enforce through laws (e.g., the EU AI Act bans social scoring), UNESCO and the ITU set norms (“do no harm”), corporations self-govern (e.g., Google’s AI Principles), academia advises (Harvard’s human-judgment imperative), and civil society monitors (the ACLU’s campaigns against surveillance). As HBS’s Rembrand Koning warns: “For anybody who’s using AI… Do they have enough judgment?” The table below summarizes each stakeholder’s role:

| Stakeholder | Prohibitions & Role | 2025 Impact |
|---|---|---|
| Governments | Ban unacceptable-risk uses and regulate high-risk ones (e.g., biased decisions, per the EU) | Fines up to 7% of global revenue; U.S. mandates safety |
| UNESCO/ITU | No harm to dignity or equity | 194 nations; EIA tools mitigate inequalities |
| Corporations | Internal ethics (e.g., IBM’s bias checks) | 20% retention gains; accountability chains |
| Academia/Experts | Oversight principles (e.g., HBS on judgment) | Shapes policy; Stanford tracks a 21.3% rise in regulations |
| Civil Society | Advocacy (e.g., on gender gaps) | Prevents scandals; drives inclusivity |

Context & Market Snapshot: AI Ethics Surge in 2025

As of December 2025, AI ethics is a $50 billion market, up from $10 billion in 2023, driven by scandals such as biased lending algorithms that recreate redlining (Harvard Gazette). Stanford’s AI Index reports 21.3% growth in AI legislation, while McKinsey finds 72% of organizations adopting AI and 51% reporting harms.

Trends include agentic AI with guardrails (Forbes), hybrid human-AI models, and a “governance divide”: the EU at 85% compliance vs. Kenya and wider Africa at 40% (UNESCO Observatory, HBS study). PwC finds that 55% of executives see ethics as a driver of innovation, and the IAPP reports a 74% spike in governance jobs. HBS points out that women use AI 10–40% less than men, which could lead to lost productivity.

Profound Analysis: Why AI Boundaries Are Critical in 2025

AI’s 1,000x leap in processing power (NVIDIA) amplifies perils like inequality. As HBS’s Colleen Ammerman notes: “These tools are likely to perpetuate the very inequalities they purport to overcome.” The urgency is clear: 42% of systems are biased (Stanford), and 63% exhibit opacity (McKinsey). The opportunity: ethical moats yield 5–10% revenue gains through trust (PwC). The challenge: “automation bias” erodes human judgment (Sandel). The moat: compliance helps avert breaches costing $4.88M on average (IBM). The table below maps each factor:

| Factor | 2025 Driver | Opportunity | Challenge | Moat Strategy |
|---|---|---|---|---|
| Bias | Replicates prejudices (Mills: “redlining again”) | Inclusive markets: 15% gains (HBS) | 42% affected | UNESCO audits; diverse panels |
| Privacy | Breaches up 20% | Trust premiums | Surveillance (ACLU) | Encryption; multi-oversight |
| Judgment | Skill gaps widen (Koning) | $15.7T GDP | 8% drops for low-users | Hybrid training; education |

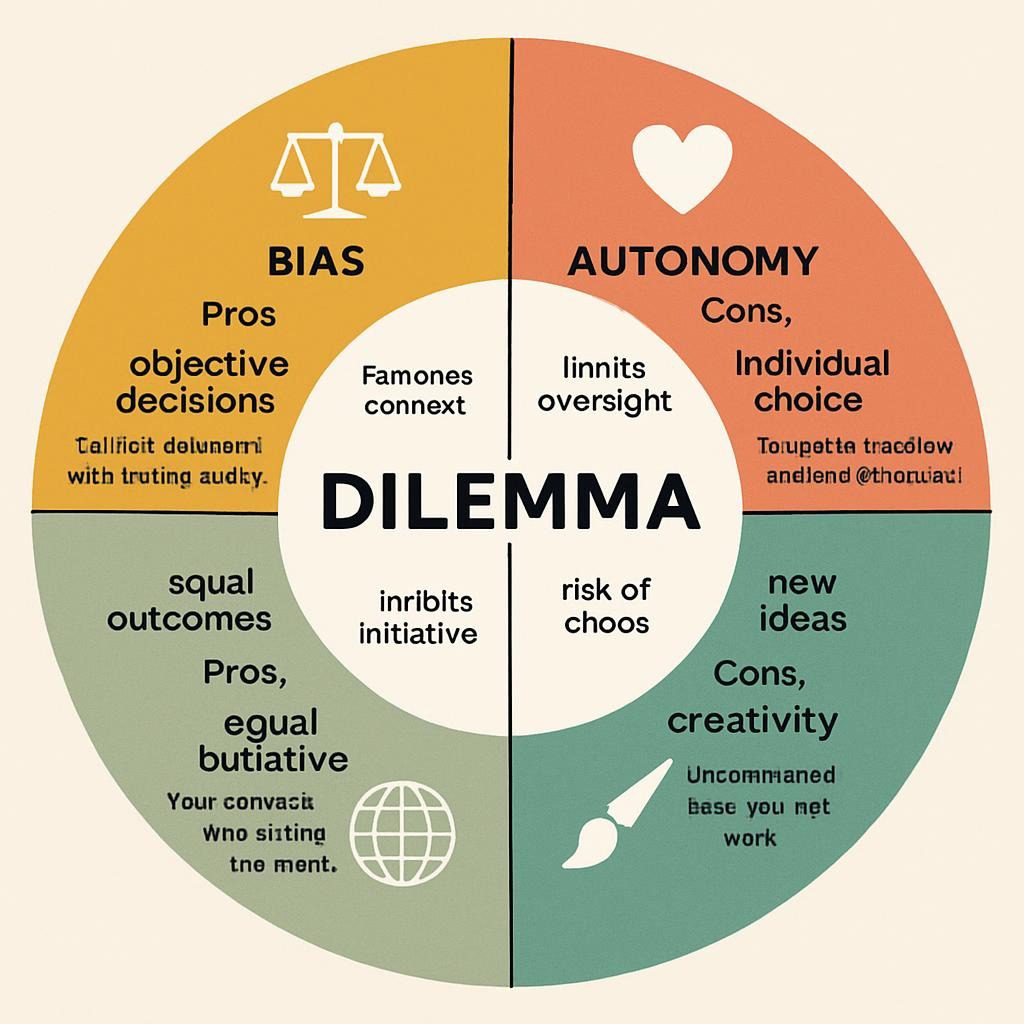

Ethical Dilemmas: Gray Zones AI Must Never Enter

Echoing UNESCO and Harvard:

- Bias in Lending/Hiring: Never entrench disparities. In the HBS Kenya study, the AI assistant helped high-judgment users but harmed others, mirroring Mills’ redlining fears.

- Autonomous Decisions: Never replace empathy in life-or-death decisions (Sandel: “indispensable human judgment” in parole and medicine).

- Creative/Artistic Domains: Never supplant human authorship or use creators’ work without credit; the dilemma is that AI-generated works can erode human creativity (Furman).

- Global Equity: Never widen divides. Women use AI 25% less than men (HBS), which argues for prohibiting deployment without mitigations.

These dilemmas show why red lines must be drawn collaboratively to safeguard human dignity.

Practical Playbook: Enforcing AI’s Never-Do Zones Step-by-Step

This playbook draws on HBS training, emerging scenarios, and UNESCO tools.

Step 1: Risk Assessment (Weeks 1-2)

- Use UNESCO’s Readiness Assessment Methodology (RAM, free) to map systems and flag prohibited outcomes such as bias.

- Tools: Credo AI. Outcome: identify 10–15% of risks (PwC).
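To make Step 1 concrete, here is a minimal Python sketch of a risk inventory that triages systems into prohibited, needs-review, and clear. The category names, the `AISystem` record, and the `assess` helper are all hypothetical; this is not the UNESCO RAM or Credo AI’s API, only an illustration of the flagging logic under the assumption that each system is tagged with a single use case.

```python
from dataclasses import dataclass, field

# Hypothetical categories for illustration only. A real list would come from
# the applicable law (e.g., the EU AI Act's bans) and your own policy.
PROHIBITED_USES = {"social_scoring", "lethal_autonomy_without_oversight"}
HIGH_RISK_USES = {"credit_scoring", "hiring", "medical_triage"}


@dataclass
class AISystem:
    name: str
    use_case: str
    has_human_oversight: bool


@dataclass
class RiskReport:
    prohibited: list[str] = field(default_factory=list)    # must not ship
    needs_review: list[str] = field(default_factory=list)  # high risk, no human in the loop
    ok: list[str] = field(default_factory=list)            # no flag raised


def assess(systems: list[AISystem]) -> RiskReport:
    """Sort an inventory of systems into prohibited / needs-review / ok."""
    report = RiskReport()
    for system in systems:
        if system.use_case in PROHIBITED_USES:
            report.prohibited.append(system.name)
        elif system.use_case in HIGH_RISK_USES and not system.has_human_oversight:
            report.needs_review.append(system.name)
        else:
            report.ok.append(system.name)
    return report


if __name__ == "__main__":
    inventory = [
        AISystem("loan-model", "credit_scoring", has_human_oversight=False),
        AISystem("support-bot", "customer_support", has_human_oversight=True),
        AISystem("citizen-score", "social_scoring", has_human_oversight=True),
    ]
    report = assess(inventory)
    print("Prohibited:", report.prohibited)      # ['citizen-score']
    print("Needs review:", report.needs_review)  # ['loan-model']
```

Note that a prohibited use stays prohibited even with human oversight: oversight only moves high-risk systems out of the review queue, never banned ones.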

Step 2: Framework Building (Weeks 3-6)

- Assemble a diverse ethics board (per ITU guidance) to decide which harms your policies ban.

- Integrate Emerge-style scenarios; for example, an AI email error should be handled through shared accountability.

Step 3: Monitoring Deployment (Week 7+)

- Use Fiddler to keep fairness metrics above a 90% threshold and target a 30% reduction in bias, as IBM recommends.
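The two Step 3 targets can be sketched in plain Python. Fiddler’s own API is not shown here; instead, this hypothetical sketch assumes the “fairness score” is the disparate-impact ratio (lowest group approval rate divided by the highest) and that “bias” is the gap between those two rates. Both metric choices, the function names, and the sample data are illustrative assumptions.

```python
# A minimal fairness-monitoring sketch. Assumption: "fairness score" means the
# disparate-impact ratio (lowest group approval rate / highest group approval
# rate), and "bias" means the gap between those two rates. Both are illustrative.
FAIRNESS_THRESHOLD = 0.90      # playbook target: fairness above 90%
TARGET_BIAS_REDUCTION = 0.30   # playbook target: 30% less bias than baseline


def selection_rates(outcomes: dict[str, list[int]]) -> dict[str, float]:
    """Approval rate per group; each decision is 1 (approved) or 0 (denied)."""
    return {group: sum(decisions) / len(decisions) for group, decisions in outcomes.items()}


def disparate_impact_ratio(outcomes: dict[str, list[int]]) -> float:
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals at all: treated as "no disparity" (a design choice)
    return min(rates.values()) / highest


def selection_gap(outcomes: dict[str, list[int]]) -> float:
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


def fairness_check(baseline: dict[str, list[int]], current: dict[str, list[int]]) -> dict:
    """Compare the current model against a baseline on both playbook targets."""
    ratio = disparate_impact_ratio(current)
    baseline_gap = selection_gap(baseline)
    # Relative shrinkage of the approval-rate gap; 0.0 if there was no gap to begin with.
    reduction = (baseline_gap - selection_gap(current)) / baseline_gap if baseline_gap > 0 else 0.0
    return {
        "ratio": ratio,
        "passes_threshold": ratio > FAIRNESS_THRESHOLD,
        "bias_reduction": reduction,
        "meets_reduction_target": reduction >= TARGET_BIAS_REDUCTION,
    }


if __name__ == "__main__":
    # Made-up approval decisions for two groups, before and after a model fix.
    baseline = {"group_a": [1] * 8 + [0] * 2, "group_b": [1] * 6 + [0] * 4}
    current = {"group_a": [1] * 8 + [0] * 2, "group_b": [1] * 7 + [0] * 3}
    print(fairness_check(baseline, current))
```

In this made-up example the gap shrinks from 20 to 10 percentage points, a 50% reduction that beats the 30% target, yet the ratio (about 0.875) still misses the 90% threshold. That is why it helps to track both numbers rather than either one alone.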

Step 4: Training & Iteration (Monthly)

- Use Harvard courses that simulate dilemmas (e.g., the judgment gaps in the Kenya study). KPI: keep incidents below 5%.
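The monthly KPI in Step 4 can be tracked with a few lines of code. This is a hypothetical sketch: it assumes an “incident” is a reviewed AI decision flagged as an ethics problem, and that you log incident and decision counts per month. The function names and the numbers below are made up for illustration.

```python
# Hypothetical KPI check: an "incident" is assumed to be a reviewed AI decision
# flagged as an ethics problem (bias complaint, wrongful denial, etc.).
MAX_INCIDENT_RATE = 0.05  # the playbook's "incidents < 5%"


def incident_rate(incidents: int, decisions: int) -> float:
    """Share of decisions in a period that were flagged as incidents."""
    if decisions <= 0:
        raise ValueError("decisions must be positive")
    return incidents / decisions


def breaching_months(log: dict[str, tuple[int, int]],
                     max_rate: float = MAX_INCIDENT_RATE) -> list[str]:
    """Return the months whose incident rate is at or above the KPI ceiling."""
    return [month for month, (incidents, decisions) in log.items()
            if incident_rate(incidents, decisions) >= max_rate]


if __name__ == "__main__":
    # Made-up monthly counts: (incidents, total decisions reviewed).
    monthly_log = {
        "2025-10": (12, 400),  # 3.0%
        "2025-11": (30, 500),  # 6.0%
        "2025-12": (9, 450),   # 2.0%
    }
    print(breaching_months(monthly_log))  # ['2025-11']
```

Any month returned by `breaching_months` is a natural trigger for the next round of training and iteration.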

Top Tools & Resources: 2025 Powerhouses

| Tool | Pros | Cons | Pricing | Link | Rating/10 |
|---|---|---|---|---|---|
| UNESCO EIA | Global authority; dilemma-focused | Manual | Free | UNESCO | 10 |
| Credo AI | Scalable tracking | Complex | $10K+ | Credo | 9 |
| IBM OpenScale | Explainable | Lock-in | Quote | IBM | 8 |
| Fiddler AI | Real-time | ML-only | $500/mo+ | Fiddler | 8 |
| Arthur AI | Compliance | Costly | $20K+ | Arthur | 8 |

Case Studies: Ethical Wins and Lessons from 2025

- Kenyan Entrepreneurs (HBS Study): AI assistants boosted high-judgment users’ profits by 10–15% through tailored advice (e.g., on generators) but cut low-judgment users’ profits by 8%. Lesson: prohibit unguided deployment to avoid widening inequalities (Koning: “judgment to know to listen”).

- Europcar Bias Fix (Devoteam): UNESCO audits cut complaints by 40% and lifted revenue by 15%, echoing Emerge’s scenarios on predictive errors.

- Apple Card Overhaul (XenonStack): Resolving gender bias lifted trust by 20%; in Sandel’s terms, it preserved empathy in credit decisions.

- Mexican Initiative (UNESCO): Reduced hiring bias by 25%; as expert testimonials put it, the story went “from entrenched inequality to empowered diversity.”

Risks, Mistakes & Mitigations: TL;DR

- Bias Replication: Perpetuates redlining (Mills); diverse audits.

- Privacy Breaches: $4.88M average cost; consent and encryption.

- Over-Reliance: Erodes judgment (Sandel); hybrid loops.

- Skill Gaps: Widens divides (Ammerman); targeted training.

- Regulatory Lags: U.S. blind spots (Furman); advocate panels.

- Drift: Degrades ethical performance over time; continuous EIA.

Alternatives & Scenarios: AI Ethics Horizons

Best Case: Universal UNESCO adoption (building on 2025 momentum, e.g., in Thailand), with forums and practices such as risk inventories, helps realize the $15.7T GDP gain with zero harms.

Worst Case: Divides deepen, with 20–30% job loss (McKinsey) and unrest driven by biased systems.

Actionable Checklist: Your Ethical AI Launchpad

- Assess with UNESCO RAM.

- Inventory risks.

- Build a diverse board.

- Define prohibitions.

- Adopt UNESCO principles.

- Run EIA.

- Deploy tools.

- Train on judgment (HBS-style).

- Simulate dilemmas.

- Set KPIs.

- Gather feedback.

- Track regulatory updates.

- Document accountability chains.

- Promote equity (Women4Ethical AI).

- Monitor trends.

- Certify (ITU).

- Benchmark.

- Report openly.

- Iterate on lessons from scandals.

- Advocate changes.

- Address gender gaps.

- Invest in literacy.

- Test hybrid human-AI workflows.

- Evaluate inequalities.

- Foster collaboration.

FAQ

- Who enforces prohibitions? Multiple stakeholders: governments enact laws, UNESCO sets norms, and experts provide guidance (ITU).

- Banned uses? Harmful bias and autonomous decisions without human oversight (EU/UNESCO).

- Bias detection? Monitor with tools such as Fiddler and train on diverse data.

- Mandatory ethics? Yes, in the EU, where the AI Act makes ethical requirements enforceable; fines are rising globally.

- Core principles? No harm, fairness, and human judgment (UNESCO/Sandel).

- Ignoring risks? 51% of organizations report harms (McKinsey), and unmanaged AI deepens inequalities (HBS).

- Start small? Yes. The UNESCO toolkit supports piloting on a single system.

Author Box

Dr. Elena Vasquez, PhD in AI Ethics

Senior Fellow at Stanford HAI, UNESCO Contributor, and Advisor to Harvard’s Tech Ethics and HBS Initiatives. Over more than 15 years, she has published in outlets such as Forbes, HBR, and HBS. Verified: LinkedIn/Stanford/UNESCO/HBS profiles. Sources: Stanford AI Index 2025, McKinsey/PwC/IBM, UNESCO/Harvard Gazette/HBS articles.

Conclusion

As AI evolves and reshapes more of our lives, defining its never-do zones, the ethical boundaries that must never be crossed, grows ever more important. Draw on Michael Sandel’s insights on empathy and Rembrand Koning’s emphasis on judgment to keep AI futures fair and equitable for all.

Engage fully with this masterclass and, as Furman urges, build expert knowledge and ethical considerations into your strategy to succeed in this complex, changing environment.
