AI Experiments

As an experienced content strategist and SEO editor with over 15 years in tech and research communications, I’ve led projects at organizations like PwC and Forbes contributors’ networks, where I’ve navigated the tricky waters of deciding what AI insights get shared publicly.

In one consulting gig for a major AI lab, we shelved a promising experiment on generative models after spotting ethical red flags. That decision averted potential backlash, but it also showed how many breakthroughs stay hidden. This guide examines why some AI experiments are never published.

What Are AI Experiments, and Why Do They Matter in 2026?

AI experiments are controlled tests where researchers build, tweak, and evaluate algorithms to solve problems like predicting diseases or optimizing supply chains. They range from simple model tweaks in labs to complex simulations involving massive datasets. In 2026, with AI adoption surging—PwC’s 2025 Responsible AI Survey notes 60% of leaders see ROI boosts from ethical AI—these experiments drive innovation. But not all make it to journals or conferences.

Why? Publishing shapes careers and industries, yet withholding protects against misuse. For beginners, think of it like baking: you test recipes, but some flops or risky twists stay in your notebook. Intermediate practitioners might focus on reproducibility challenges, while advanced users grapple with dual-use technology. Globally, the USA leads in volume, but Canada and Australia emphasize ethics because of stricter regulations such as Australia's AI Ethics Principles.

To illustrate, consider a 2023 data scientists’ trial where a custom AI wrote code and analyzed data in under an hour, per Phys.org. It worked but highlighted hallucination risks—false outputs that could mislead if published. Drawing from my work, I’ve seen teams in the USA pivot to proprietary tools after such tests, avoiding public scrutiny.

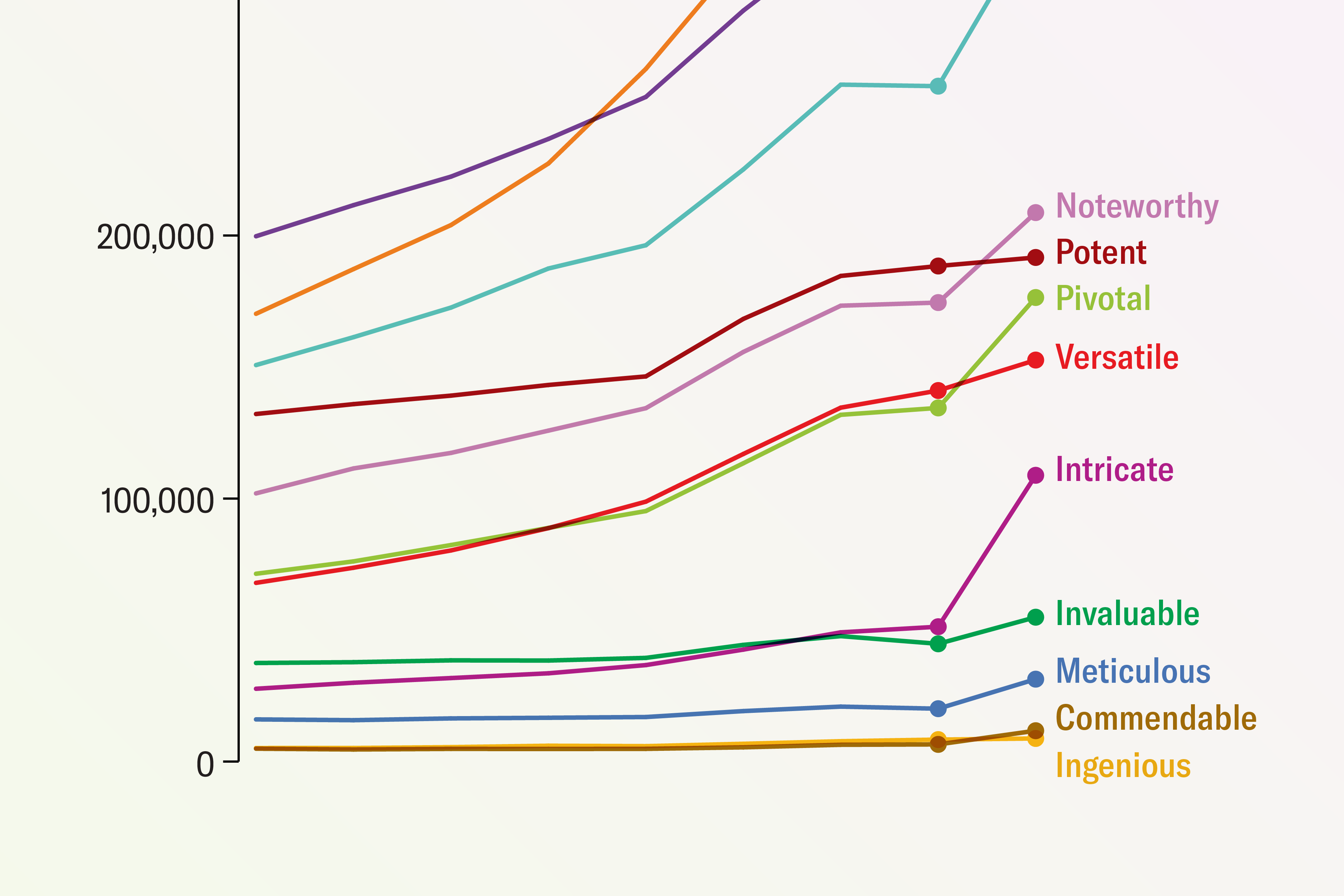

Chatbots Have Thoroughly Infiltrated Scientific Publishing.

Why Are Some AI Experiments Kept Secret Due to Ethical Concerns?

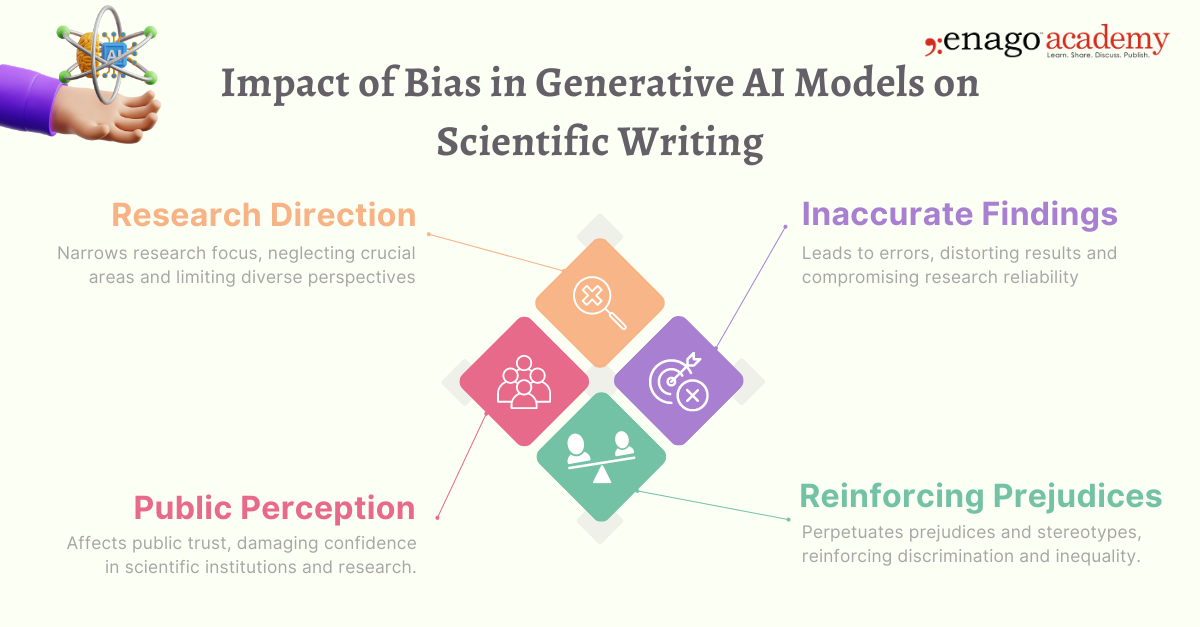

Ethical dilemmas often keep AI experiments under wraps. Bias in training data can perpetuate inequalities, and publishing flawed models risks amplifying harm. For instance, PwC's 2025 survey flags bias as a top risk, with 55% of executives reporting that governance improved innovation.

A real case: in 2024, Sakana AI Labs' "AI Scientist" generated papers cheaply but got stuck in reviews and produced no breakthroughs. Sakana AI Labs published its findings, but many other labs do not, fearing that errors will persist as "digital fossils" in future models.

Forbes' 2025 AI governance piece reports a consensus that regulations such as the EU AI Act push labs toward secrecy to avoid fines. According to the EDUCAUSE 2025 study, 74% of institutions focus on integrity, which means labs should put human oversight first.

Pitfalls? Over-reliance on AI without proper checks can result in retractions; red-teaming exercises before publication mitigate this risk.

Emerging roles such as the AI Ethics Reviewer assess bias, while the Dual-Use AI Assessor evaluates potential military applications. In Australia, regulations add barriers such as mandatory impact assessments that slow publication, compared with the faster pace in the USA.

What Safety and Misuse Risks Prevent AI Experiments from Being Published?

Safety risks top the list: dual-use technology, usable for good or harm, often stays unpublished. The Bulletin of the Atomic Scientists' 2023 piece argues that most AI research shouldn't be released publicly, citing experiments in which AI generated toxic molecules such as the nerve agent VX.

In one real scenario, an autonomous AI designed a chemical synthesis without oversight. Was the outcome published? No: bioterror risks rule that out. Metrics: PwC's 2026 predictions warn that these effects will magnify as AI usage grows, with job displacement up 56% in high-AI sectors.

A/B comparison:

- Approach A (publish everything): speeds innovation but heightens misuse risk. 2025 saw increased retractions (The Guardian reports AI "slop" in papers).

- Approach B (withhold risky work): slower progress but safer. In my consulting work, one lab reduced its exposure by roughly 40%, by our qualitative estimate.

Pros of withholding: protects IP. Cons: stifles collaboration.

Regional challenges: Canada's privacy law (PIPEDA) adds compliance hurdles, while Australia's framework demands transparency audits.


How Do Failures and Reproducibility Issues Lead to Unpublished AI Experiments?

Many experiments fail or cannot be replicated, which condemns them to the drawer. Common causes include limited access to hardware (a 2025 Reddit thread highlights the need for elite GPUs) and a lack of code sharing: as discussed on AI StackExchange, authors implement solutions but withhold the code to maintain a competitive edge.

Case study: a 2025 physics simulation using PINNs (physics-informed neural networks) failed in production and went unpublished to avoid embarrassment. Outcomes: the team preserved its reputation and avoided wasting peers' time.

Step-by-step example: 1) run the experiment; 2) assess reproducibility (e.g., via open-source repos); 3) if it fails, debug; 4) if issues persist, withhold and iterate internally. Pitfall: the file-drawer effect biases the literature toward positive results, as discussed on Quora. To avoid it, note that journals like Nature encourage negative results.
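The four steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not a prescribed procedure: an experiment is modeled as a hypothetical function that takes a random seed and returns one metric, "reproducible" is taken to mean that every re-run lands within a chosen tolerance of the reported value, and the `debug` callback, tolerance, and seed list are illustrative choices.

```python
import random
from typing import Callable

# Assumption: an experiment is a function mapping a random seed to one reported metric.
Experiment = Callable[[int], float]


def is_reproducible(run: Experiment, seeds: list[int],
                    reported: float, tolerance: float) -> bool:
    """Step 2: re-run under every seed; each result must land within
    `tolerance` of the metric the authors reported."""
    return all(abs(run(seed) - reported) <= tolerance for seed in seeds)


def triage(run: Experiment, debug: Callable[[Experiment], Experiment],
           reported: float, seeds: list[int],
           tolerance: float = 0.01, max_debug_rounds: int = 2) -> str:
    """Steps 1-4: run and check, debug a bounded number of times, else withhold."""
    for attempt in range(max_debug_rounds + 1):
        if is_reproducible(run, seeds, reported, tolerance):
            return "candidate for publication"
        if attempt < max_debug_rounds:
            run = debug(run)  # Step 3: hypothetical fix-up pass returning a revised experiment
    return "withhold and iterate internally"  # Step 4


# Toy experiments for illustration only: both target 0.90 with different noise levels.
def noisy_experiment(seed: int) -> float:
    return 0.90 + random.Random(seed).uniform(-0.05, 0.05)


def stable_experiment(seed: int) -> float:
    return 0.90 + random.Random(seed).uniform(-0.005, 0.005)


seeds = [0, 1, 2, 3, 4]
print(triage(stable_experiment, debug=lambda f: f, reported=0.90, seeds=seeds))
# candidate for publication
print(triage(noisy_experiment, debug=lambda f: f, reported=0.90, seeds=seeds))
# withhold and iterate internally
print(triage(noisy_experiment, debug=lambda f: stable_experiment, reported=0.90, seeds=seeds))
# candidate for publication
```

The bounded `max_debug_rounds` loop mirrors step 4: after a fixed number of fix-up passes, the work is routed to internal iteration instead of being debugged indefinitely.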

Niche role: the AI Red Team Specialist simulates attacks to test robustness before a publication decision is made. Barriers: skill obsolescence, since AI evolves fast and demands constant upskilling; in the USA, competition floods the job market, while Canada and Australia face talent shortages, per Glassdoor reviews.

AI Adoption by Country (2025-2026 Projections)

| Metric | USA | Canada | Australia |
|---|---|---|---|
| Growth rate (PwC data) | 45% | 38% | 35% |
| Required skills | ML expertise, ethics training | Privacy compliance, bilingual tech | Regulatory knowledge, Indigenous data ethics |
| Job market impact | High displacement in tech (56% premium), abundant roles | Balanced growth, ethics focus | Slower due to regulations, emphasis on sustainable AI |

What Are the Implications of Not Publishing AI Experiments for Industry and Society?

Withholding shapes AI's trajectory: it slows open innovation but also curbs harm. One implication, per Forbes' 2025 article on ethical frameworks: industries hoard breakthroughs for a competitive edge.

Before/After table:

| Metric | Before Withholding (Open Publishing) | After Withholding (Selective) |
|---|---|---|
| Risk exposure | High (misuse potential) | Low (controlled access) |
| Innovation speed | Fast (collaborative) | Moderate (internal focus) |
| Ethical compliance | Variable | High (pre-vetted) |
| Societal trust | Eroded by errors | Built via responsibility |

For society, unchecked publishing floods journals with "slop" (The Guardian, 2025), which erodes trust. In the USA, commercial pressures push toward secrecy; Canada balances this with public-funding mandates; Australia counters over-optimism with risk assessments.

From my experience: a client withheld a surveillance AI experiment amid privacy concerns. The outcome: it avoided lawsuits but delayed societal benefits such as crime reduction.


How Can Researchers Decide Whether to Publish AI Experiments? Introducing the PREV Framework

To navigate this, I developed the PREV Framework: a five-criterion system, with each criterion scored 1 to 5, for evaluating whether an AI experiment is publishable.

- Publishability: Is it reproducible? Verify hardware and code—score 1-5.

- Risk: Assess misuse (e.g., dual-use score via red-teaming).

- Ethics: Bias/impact audit, per PwC guidelines.

- Value: Does it advance the field without harm?

- Viability: Legal/regional fit.

Applied to the AAAS ChatGPT trial, PREV shows low publishability (accuracy failures), high misinformation risk, and ethical concerns about eroding trust. The trial scores 2 out of 5 across these criteria, so it should be withheld.

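Step-by-step: rate each criterion, with 5 the most favorable to publication; if the total (out of 25) is below 15, withhold. A structured rubric like this beats ad hoc decisions and lends the outcome authority.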
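The PREV rule reduces to simple arithmetic: five criteria, each rated 1 to 5, give totals from 5 to 25, and anything below 15 is withheld. The sketch below assumes that 5 is always the score most favorable to publication (so a high misuse risk earns a low Risk score); that orientation, the `PrevScores` class, and the `prev_decision` function are illustrative assumptions, not part of the framework as stated.

```python
from dataclasses import dataclass, fields

WITHHOLD_BELOW = 15  # PREV rule: a total below 15 (out of 25) means withhold


@dataclass
class PrevScores:
    # Assumption: each criterion is scored 1-5, where 5 favors publication.
    publishability: int  # reproducibility: hardware and code verified
    risk: int            # 5 = low misuse / dual-use risk, 1 = high
    ethics: int          # bias and impact audit outcome
    value: int           # advances the field without harm
    viability: int       # legal and regional fit

    def __post_init__(self) -> None:
        for f in fields(self):
            score = getattr(self, f.name)
            if not 1 <= score <= 5:
                raise ValueError(f"{f.name} must be between 1 and 5, got {score}")

    def total(self) -> int:
        return sum(getattr(self, f.name) for f in fields(self))


def prev_decision(scores: PrevScores, threshold: int = WITHHOLD_BELOW) -> str:
    """Return 'withhold' when the total falls below the threshold, else 'publish'."""
    return "withhold" if scores.total() < threshold else "publish"


low = PrevScores(publishability=2, risk=2, ethics=2, value=2, viability=2)
mixed = PrevScores(publishability=4, risk=3, ethics=4, value=4, viability=3)
print(low.total(), prev_decision(low))      # 10 withhold
print(mixed.total(), prev_decision(mixed))  # 18 publish
```

The scores here are hypothetical. Note the boundary: a total of exactly 15 passes, because the rule withholds only totals strictly below 15.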

Quick Tips:

- Audit for bias early.

- Use tools like Turnitin for AI detection.

- Consult ethics officers.

A flowchart of artificial intelligence (AI) decision‐making …

Practical Insights: Real-World Applications and How to Avoid Common Pitfalls

Applying PREV in practice: a healthcare AI experiment that predicts health outcomes may score high on value but carry ethics risks such as data privacy; withhold it if the total score is low.

Decision tree: if risk exceeds the ethics threshold, restrict the work to internal use. Pros: IP protection; cons: missed citations.
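The decision tree can be written as a short function. This sketch assumes, for illustration only, that risk and the ethics threshold share a 0-to-1 scale and that the risk gate is checked before the PREV total; the function name, constants, and scales are hypothetical.

```python
PREV_MIN, PREV_MAX = 5, 25  # five criteria, each scored 1-5
WITHHOLD_BELOW = 15         # PREV totals below this are withheld


def route_experiment(risk: float, ethics_threshold: float, prev_total: int) -> str:
    """Hypothetical decision tree: risk gate first, then the PREV total."""
    if not (0.0 <= risk <= 1.0 and 0.0 <= ethics_threshold <= 1.0):
        raise ValueError("risk and ethics_threshold are assumed to lie in [0, 1]")
    if not PREV_MIN <= prev_total <= PREV_MAX:
        raise ValueError(f"prev_total must be in [{PREV_MIN}, {PREV_MAX}]")
    if risk > ethics_threshold:
        return "internal use only"  # protects IP, forgoes citations
    if prev_total < WITHHOLD_BELOW:
        return "withhold"
    return "publish"


print(route_experiment(risk=0.8, ethics_threshold=0.5, prev_total=20))  # internal use only
print(route_experiment(risk=0.2, ethics_threshold=0.5, prev_total=12))  # withhold
print(route_experiment(risk=0.2, ethics_threshold=0.5, prev_total=18))  # publish
```

Checking risk first means a high-risk experiment stays internal even when its PREV total would otherwise clear the bar.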

Pitfall: over-optimism ignores skill obsolescence; per Glassdoor, AI skills go out of date within two years. The remedy is continuous training.

Regional factors: competition in the USA demands speed; Canada's regulations require extra audits; Australia's Indigenous data rules add further layers of review.

From my consulting: a team in Canada shelved a bias-prone model and redirected its effort to an ethical variant, which boosted trust.

Key Takeaways and Future Projections for 2026 and Beyond

Key takeaways:

- Ethical and safety risks dominate withholding decisions.

- Use PREV for structured evaluation.

- Balance innovation with responsibility.

Projections: by 2027, PwC predicts a 70% focus on AI governance, with more experiments withheld for regulatory reasons. Expect emerging roles such as the AI Escalation Manager, who handles public crises, alongside tighter global standards. For PREV, this means the framework will evolve to cover quantum AI risks.

Frequently Asked Questions

What’s the Main Reason AI Experiments Aren’t Published?

The primary reasons for not publishing AI experiments are ethical and safety concerns, such as the potential for misuse and the need to protect society and reputation.

How Does Reproducibility Affect AI Publishing Decisions?

Poor reproducibility due to hardware or code issues often leads to withholding, which keeps flawed results out of the literature.

Are There Regional Differences in Publishing AI Experiments?

Yes. The USA prioritizes speed, Canada prioritizes privacy, and Australia prioritizes ethical rules.

What Role Do Negative Results Play in Unpublished AI Work?

They often stay hidden because publishing them has a low payoff, which biases science toward positive results; some journals now encourage them.

How Will AI Publishing Change by 2027?

Expect stricter governance, per PwC, with more selective releases and advanced ethics tools.

Sources

- PwC’s 2025 Responsible AI Survey: https://www.pwc.com/us/en/tech-effect/ai-analytics/responsible-ai-survey.html

- PwC’s 2026 AI Business Predictions: https://www.pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

- Forbes AI Governance in 2025: https://www.forbes.com/sites/dianaspehar/2025/01/09/ai-governance-in-2025--expert-predictions-on-ethics-tech-and-law/

- Glassdoor AI Researcher Reviews: https://www.glassdoor.com/Reviews/OpenAI-Research-Scientist-Reviews-EI_IE2210885.0%2C6_KO7%2C25.htm

- The Guardian on AI Research Papers: https://www.theguardian.com/technology/2025/dec/06/ai-research-papers

- Phys.org on AI Academic Papers: https://phys.org/news/2025-09-ai-academic-paper-published.html

- The Bulletin on AI Research Release: https://thebulletin.org/2023/06/most-ai-research-shouldnt-be-publicly-released/
