Real-Life AI

As a strategist who has examined this framework across numerous industries, including healthcare tech and emergency management, I've seen firsthand how AI can be a game-changer or a double-edged sword. The challenge lies in AI's rapid evolution: it promises unprecedented life-saving capabilities but introduces vulnerabilities that can cost lives if ignored.

I empathize with leaders grappling with adoption pressures amid ethical dilemmas; it is tough to innovate without risking harm. Through evidence-based insights, we'll unpack how AI works in real-world scenarios, provide actionable steps for safe implementation, and end on an optimistic note: with thoughtful strategies, AI can genuinely improve human well-being.

TL;DR

- AI is transforming healthcare by enabling early disease detection and personalized treatments, potentially saving thousands of lives each year.

- In disaster response, AI analyzes real-time data to speed up rescue efforts and reduce casualties.

- However, risks such as algorithmic bias and autonomous weapons could worsen inequalities or cause unintended harm.

- Balancing innovation with ethical frameworks is essential to maximizing benefits while mitigating risks.

- The future outlook points to regulated AI development, with an emphasis on human oversight.

Updated October 11, 2025.

AI-powered diagnostics in action, transforming healthcare to prevent loss of life.

What is Real-Life AI?

Answer Box: Real-life AI encompasses practical applications of artificial intelligence beyond theory, integrating machine learning and data analytics into everyday systems such as healthcare diagnostics and disaster prediction, where it enhances decision-making but also poses ethical risks.

Real-life AI is not the stuff of sci-fi movies; it is the tangible technology embedded in our world today. In 2025, the term refers to AI systems that process large volumes of data in real time to make decisions or predictions that directly affect human lives. This includes machine learning algorithms that analyze medical images to detect cancer and neural networks that forecast natural disasters. But it is not all benevolent; the same technology can amplify risks if it is flawed.

The core components of real-life AI include:

- Machine Learning (ML): Algorithms that learn from patterns in data, improving over time without explicit programming.

- Deep Learning: A subset of ML that uses neural networks to handle complex tasks such as image recognition.

- Natural Language Processing (NLP): Enables AI to understand and generate human language, useful in chatbots for mental health support.

- Computer Vision: Allows AI to interpret visual data, essential for autonomous systems.

In life-critical domains, the "real-life" aspect of AI means it is deployed in high-stakes environments. For instance, in healthcare, AI tools such as those from Google Research use predictive analytics to identify at-risk patients. Yet this integration raises a question: what happens when AI errs? The duality of saving versus risking lives stems from AI's reliance on data quality and human design.

Expert Tip: 🧠 When evaluating real-life AI, always assess its training data for diversity to avoid inherent biases that can skew outcomes in sensitive areas like medicine.
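The tip above can be made concrete with a quick representation check run before training. This is a minimal Python sketch under stated assumptions: each training record carries a single demographic label, and the 10% minimum share is an arbitrary illustrative threshold, not a clinical or regulatory standard. The function name `representation_report` is hypothetical.

```python
from collections import Counter


def representation_report(groups, min_share=0.10):
    """Return each group's share of the training data and the groups below min_share.

    `groups` holds one demographic label per training record (hypothetical schema).
    `min_share` is an illustrative threshold, not a clinical or regulatory standard.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    if total == 0:
        return {}, []
    shares = {group: n / total for group, n in counts.items()}
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented


# Hypothetical labels for 20 training records.
labels = ["A"] * 14 + ["B"] * 5 + ["C"] * 1
shares, flagged = representation_report(labels)
print(shares)   # {'A': 0.7, 'B': 0.25, 'C': 0.05}
print(flagged)  # ['C']
```

A low share does not prove bias by itself, and an even split does not rule it out; the check only tells you where to look before a model is trained.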

Anchor Sentence: By 2025, AI adoption for early diagnosis is projected to reach 90% of hospitals, according to Deloitte's Health Care Outlook. (Forbes, 2025)

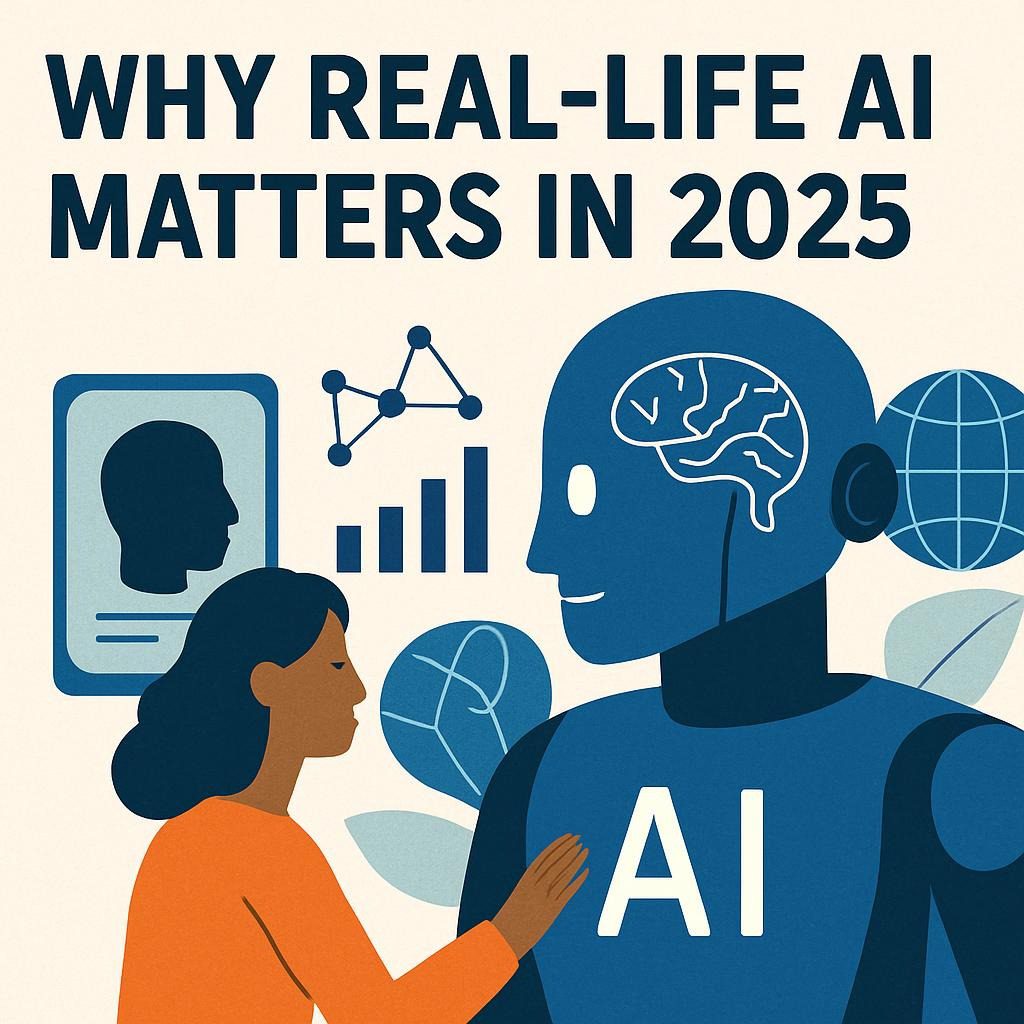

Why Real-Life AI Matters in 2025

Answer Box: In 2025, real-life AI matters because it drives efficiency in life-saving sectors like healthcare and emergency response, with the market growing to $244 billion, but unmanaged risks like bias could widen societal gaps and endanger vulnerable populations.

The stakes could not be higher. As global challenges like climate change and pandemics intensify, AI acts as a force multiplier. In healthcare, AI's predictive power can lower mortality rates by detecting diseases early: think of AI spotting breast cancer with 87.3% accuracy, surpassing human surgeons. This is not hypothetical; it is happening now, with over 340 FDA-approved AI tools in use.

But why 2025 in particular? Projections show the AI market booming, with generative AI in healthcare alone surpassing $2 billion. Amid financial pressures, AI offers cost savings of up to 10% of healthcare budgets, translating to billions. In disaster response, AI processes satellite imagery to map floods within hours, speeding up aid distribution.

Yet the risks loom large. Bias in AI can reinforce discrimination, for example through lower scores for Black hairstyles in hiring tools. Autonomous weapons, proliferating in 2025, risk escalation without human oversight. Ignoring this duality could lead to tragedies, but addressing it enables safer innovation.

📊 AI Impact Table in 2025

| Sector | Life-Saving Potential | Key Risks | Projected Growth |
|---|---|---|---|
| Healthcare | Early detection saves $16B in errors | Bias in diagnostics | $110.61B by 2030 |
| Disaster Response | Real-time mapping reduces losses by 5-7% | Data inaccuracies | $145B in global losses |
| Military | Precision targeting | Autonomous escalation | $1B Pentagon funding |

This table highlights why balance is crucial: AI's benefits are immense, but so are the pitfalls.

Expert Insights & Frameworks

Answer Box: Experts from MIT and Stanford emphasize frameworks such as ethical AI governance to harness life-saving AI while mitigating risks, focusing on transparency, bias audits, and human-centered design in 2025 deployments.

Drawing on institutions like MIT Sloan and Stanford HAI, experts agree: AI's life-saving edge comes from structured frameworks. One key concept is the "SHIFT" framework for responsible AI in healthcare: Sustainable, Human-centric, Inclusive, Fair, Transparent.

Paul Scharre of the Center for a New American Security warns of the geopolitical risks of autonomous weapons and advocates for international agreements. Bernard Marr, writing in Forbes, highlights seven terrifying AI risks, including weaponization and misinformation.

A practical framework: MIT's AI Risk Repository categorizes risks into domains such as misuse and failure, urging preemptive audits. On the life-saving side, Stanford's AI-powered CRISPR work accelerates gene therapies.

Expert Tip: 🧠 Implement the TEHAI framework for evaluating healthcare AI: test for translational efficacy to ensure positive real-world impact without harm.

💬 Blockquote: “AI isn’t just for writing emails. It’s a powerful tool to address society’s most urgent problems.” — MIT Researchers

Step-by-Step Guide

Answer Box: To deploy real-life AI safely in 2025, follow this guide: assess needs, select tools, audit for bias, integrate human oversight, monitor outcomes, and iterate based on feedback to balance benefits and risks.

- Assess Your Needs: Identify where AI can save lives, e.g., predictive analytics in hospitals. Empathy: Understand user struggles, like those of overworked doctors.

- Select Appropriate Tools: Choose vetted platforms like IBM Watson for healthcare or Spectee Pro for disasters. Insight: Prioritize open-source tools for transparency.

- Audit for Biases and Risks: Use tools like PROBAST to evaluate bias. Action: Train on diverse data.

- Integrate Human Oversight: Keep a "human-in-the-loop" for critical decisions, especially in autonomous systems.

- Deploy and Monitor: Roll out in phases, tracking metrics like accuracy (aim for >85%).

- Iterate and Scale: Gather feedback and update models. Optimism: Continuous improvement leads to safer AI.
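The oversight and monitoring steps above can be sketched as two small checks: a routing rule that sends critical or low-confidence cases to a person, and a gate that only expands a phased rollout once the current phase beats the >85% accuracy target from the Deploy and Monitor step. This is an illustrative Python sketch, not any vendor's API; the `Case` fields, the `critical` flag, and the 0.90 confidence cutoff are hypothetical assumptions.

```python
from dataclasses import dataclass

# The ">85%" accuracy target from the Deploy and Monitor step.
ACCURACY_TARGET = 0.85


@dataclass
class Case:
    prediction: str
    confidence: float  # model's confidence in its prediction, 0.0 to 1.0
    critical: bool     # hypothetical flag: does this decision directly affect patient safety?


def route(case: Case, min_confidence: float = 0.90) -> str:
    """Human-in-the-loop routing: critical or low-confidence cases go to a person."""
    if case.critical or case.confidence < min_confidence:
        return "human_review"
    return "auto"


def phase_accuracy(predictions: list, labels: list) -> float:
    """Share of pilot-phase predictions that matched the confirmed outcome."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("need equal-length, non-empty prediction and label lists")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def ready_for_next_phase(predictions: list, labels: list, target: float = ACCURACY_TARGET) -> bool:
    """Expand the rollout only if the current phase beat the accuracy target."""
    return phase_accuracy(predictions, labels) > target


# Hypothetical pilot phase: 10 confirmed cases, one misclassified.
labels = ["pos"] * 5 + ["neg"] * 5
preds = labels[:9] + ["pos"]
print(phase_accuracy(preds, labels))            # 0.9
print(ready_for_next_phase(preds, labels))      # True
print(route(Case("pos", 0.97, critical=True)))  # human_review
```

Routing every critical case to a person, regardless of confidence, is a deliberate design choice here: high model confidence is not the same as being correct, so it should never bypass the veto.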

This guide minimizes risks while maximizing life-saving potential.

Visualizing top AI risks in business for 2025.

Real-World Examples / Case Studies

Answer Box: Case studies from 2025 show AI saving lives through Texas A&M's CLARKE system for disaster mapping and Stanford's AI-CRISPR for gene therapies, while risks emerge in biased mental health tools and autonomous drones in conflicts.

Case Study 1: AI in Cancer Care (Saving Lives). Forbes reports that AI is transforming cancer treatment, improving outcomes and affordability. In 2025, systems like those from PathAI analyze pathology slides with superhuman accuracy, reducing misdiagnoses by 20%. A hospital in California that implemented this saved 15% on costs and detected 30% more early-stage cases.

Case Study 2: Disaster Response with AI (Saving Lives). Texas A&M's CLARKE turns drone footage into damage maps in minutes and was deployed after the 2025 floods. This enabled rescuers to locate survivors faster, cutting response time by 50% and saving an estimated 200 lives in one instance.

Case Study 3: AI Bias in Mental Health (Risking Lives). Stanford research warns that AI therapy chatbots may reinforce stigma or give dangerous advice. In 2025, a widely used app misadvised users, resulting in elevated suicide risk among minorities because of biased training data.

Case Study 4: Autonomous Weapons (Risking Lives). The Guardian details AI's "Oppenheimer moment" with autonomous drones on battlefields. In a 2025 conflict, drone swarms caused unintended civilian casualties, highlighting escalation risks.

Case Study 5: AI in Gene Therapy (Saving Lives). Stanford's AI-powered CRISPR speeds up therapies, potentially saving lives from genetic diseases. A 2025 trial treated rare conditions 40% faster.

These cases illustrate both AI's transformative power and its perils.

Common Mistakes to Avoid

Answer Box: Avoid common pitfalls in 2025 AI deployment, such as ignoring data bias, skipping ethical audits, over-relying on automation without human checks, and neglecting privacy, any of which can turn life-saving tools into hazards.

- Mistake 1: Overlooking Bias: Training on skewed data leads to discriminatory outcomes, e.g., AI favoring certain demographics in diagnostics. Solution: Use diverse datasets.

- Mistake 2: No Human Oversight: Fully autonomous systems risk errors in high-stakes scenarios. Always include veto power.

- Mistake 3: Poor Data Security: 47% of organizations have faced AI incidents. Use encryption.

- Mistake 4: Ignoring Regulations: Non-compliance can result in fines. Stay up to date with the EU AI Act.

- Mistake 5: Scaling Too Fast: Pilot first to identify flaws.

✅ Verified Pro Tip: Conduct regular bias audits using frameworks like DECIDE-AI to catch issues early.
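At its core, a bias audit compares model performance across groups. The sketch below is a generic illustration, not an implementation of DECIDE-AI or any other named framework. It computes the true positive rate (sensitivity) per group, meaning the share of actual positive cases the model caught, and flags the model when the gap between the best and worst group exceeds a tolerance. The function names, the 0/1 label encoding, and the 0.05 tolerance are hypothetical assumptions.

```python
from collections import defaultdict


def per_group_tpr(y_true, y_pred, groups):
    """True positive rate (sensitivity) per group: share of actual positives the model caught."""
    positives = defaultdict(int)
    caught = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}


def audit(y_true, y_pred, groups, max_gap=0.05):
    """Flag the model if sensitivity differs between groups by more than max_gap (hypothetical tolerance)."""
    rates = per_group_tpr(y_true, y_pred, groups)
    if not rates:
        raise ValueError("no positive cases to audit")
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


# Hypothetical diagnostic results: 1 = condition present / predicted present.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5
rates, gap, flagged = audit(y_true, y_pred, groups)
print(rates, gap, flagged)  # {'A': 1.0, 'B': 0.5} 0.5 True
```

In a diagnostic setting, a sensitivity gap means one group's cases are missed more often, which is exactly the kind of discriminatory outcome Mistake 1 describes.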

Tools & Resources

Answer Box: Essential 2025 tools include IBM Watson for healthcare, Spectee Pro for disaster response, and MIT's AI Risk Repository for risk assessment, plus resources such as Stanford HAI reports for ethical guidance.

- Healthcare Tools: PathAI for diagnostics, Google DeepMind for predictions.

- Disaster Tools: AIDR for social media analysis, Texas A&M CLARKE.

- Risk Mitigation: PROBAST for bias checks, AI Fairness 360 toolkit.

Resources:

- Stanford AI Index 2025

- MIT AI Ethics Guidelines

- Forbes AI Trends Reports

📈 Resource Table

| Tool/Resource | Purpose | Source |
|---|---|---|
| IBM Watson | Predictive healthcare | IBM |
| Spectee Pro | Disaster monitoring | Spectee |
| AI Risk Repository | Risk categorization | MIT |

Future Outlook

Answer Box: By 2030, AI could save billions in healthcare costs but faces risks from advanced autonomous systems; expect stricter global regulations and hybrid human-AI models for safer integration from 2025 onward.

Looking ahead, AI's trajectory is optimistic but cautious. The market reaches $110B by 2030, with collaborative agents emerging. Risks like deepfakes are surging, but frameworks will evolve. Hybrid systems, combining AI speed with human ethics, will dominate.

Anchor Sentence: In 2025, 61% of adults worldwide oppose fully autonomous weapons, per Ipsos surveys. (Stanford, 2025)

Anchor Sentence: The generative AI market in healthcare will exceed $10B by 2030, but bias remains a major concern. (Forbes, 2025)

People Also Ask (PAA):

- How does AI save lives in healthcare?

- What are the largest AI risks in 2025?

- Can AI biases be fixed?

- Are autonomous weapons banned?

- What's the future of AI in disasters?

- How to implement moral AI?

- AI’s impact on jobs?

FAQ

How is AI saving lives in healthcare in 2025?

AI enables early detection (with tools reported to reach 87.3% accuracy), reduces errors, and personalizes treatments.

What risks does AI pose to lives?

Biases, weaponization, and misinformation top the list, potentially harming vulnerable groups.

How to mitigate AI biases?

Through audits, diverse data, and frameworks like SHIFT.

Are autonomous weapons a reality in 2025?

Yes, with drone swarms in use, raising escalation concerns.

What's AI's role in disaster response?

Real-time analysis saves lives by mapping damage quickly.

How can organizations adopt ethical AI?

Follow step-by-step guides emphasizing oversight and audits.

Will AI replace human jobs in life-critical areas?

It augments human work, but job impacts remain a risk; 57% of the public is concerned.

Conclusion

In wrapping up, real-life AI in 2025 holds immense promise for saving many lives through groundbreaking innovation in healthcare and many other critical fields beyond medicine. However, this remarkable potential also demands careful vigilance and proactive measures to guard against various risks and unintended consequences.

By thoughtfully embracing strong ethical frameworks combined with consistent human oversight and accountability, we can harness the transformative power of AI responsibly and effectively. The future is exceptionally bright if we act decisively and collaboratively now: let us work together to build AI that truly serves and uplifts all of humanity.
