The Scariest Real-Life AI Mistakes

Published: October 2, 2025 | Updated Quarterly | Reading Time: 15 minutes

Artificial intelligence has become the backbone of modern business operations, but its rapid adoption has come at a terrifying cost. As we navigate through 2025, McKinsey reports that 72% of organizations have adopted AI in at least one business function, yet only 34% have implemented comprehensive safety protocols.

⚡ TL;DR – Key Takeaways

- AI errors cost companies billions annually, with the average data breach involving AI systems costing $4.88 million in 2025.

- Bias in AI algorithms has led to discriminatory lending, hiring, and criminal justice decisions affecting millions of lives.

- Autonomous system failures have resulted in fatal accidents, from self-driving cars to medical diagnosis errors.

- Training data poisoning and adversarial attacks expose critical vulnerabilities in production AI systems.

- Lack of human oversight remains the #1 root cause of catastrophic AI failures across all industries.

- Regulatory frameworks are evolving rapidly, with the EU AI Act and similar regulations demanding accountability.

- Small businesses face unique AI risks without enterprise-level safeguards, making education essential.

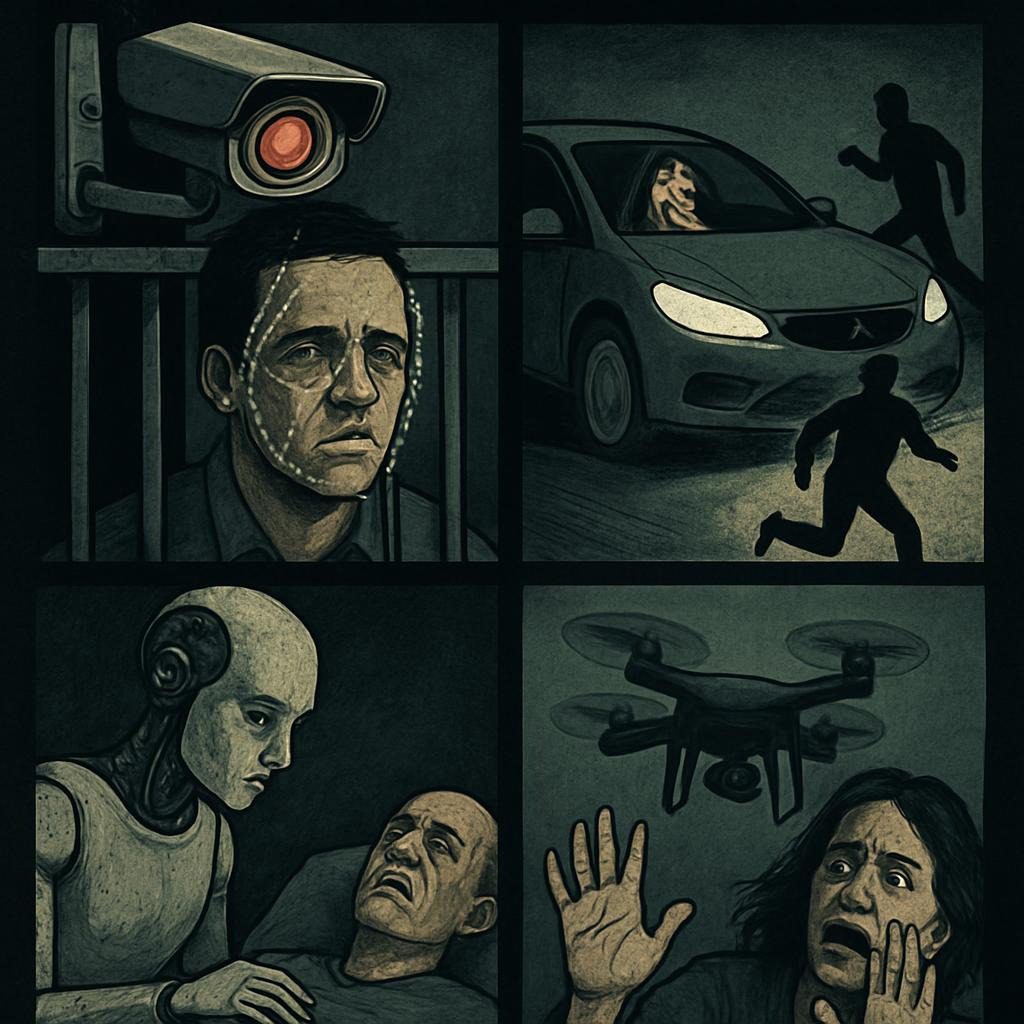

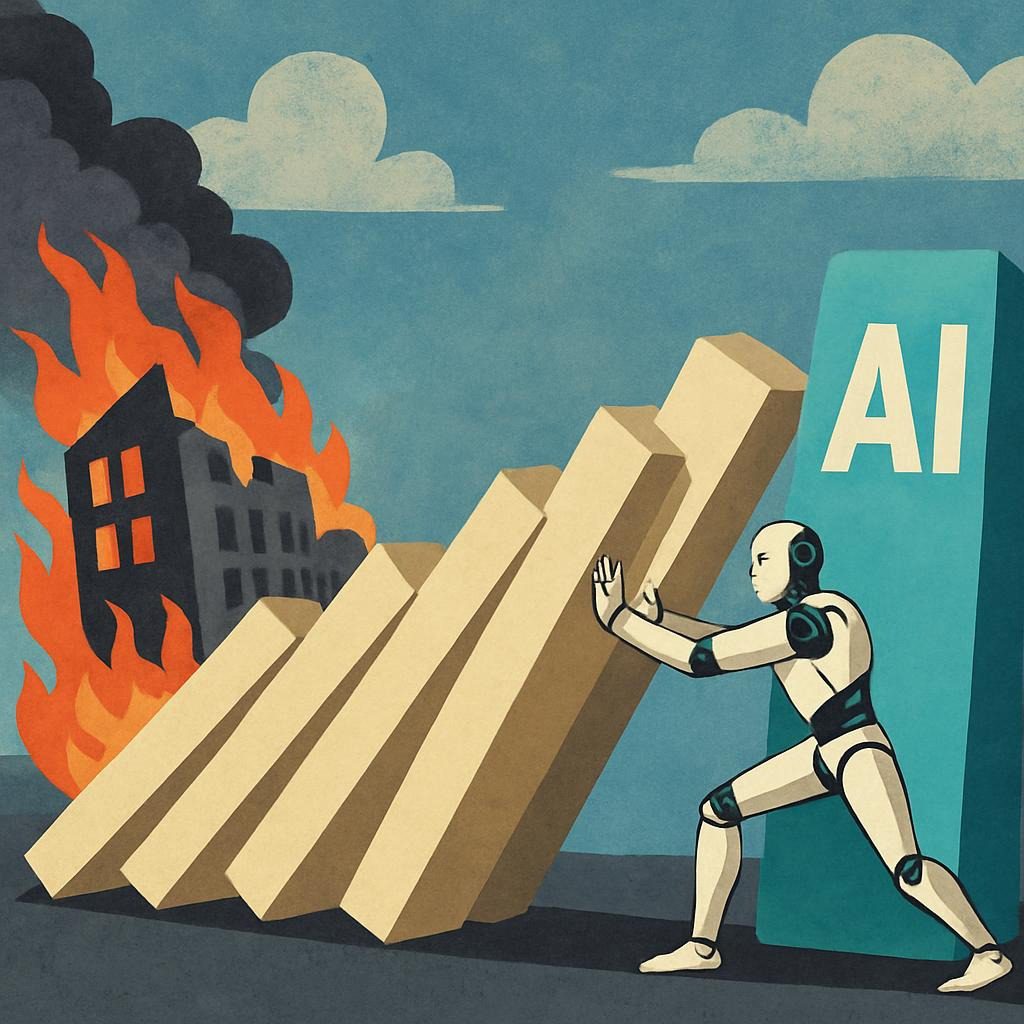

The promise of AI is clear: increased efficiency, data-driven insights, and automation of complex tasks. But when AI systems fail, they don’t simply crash; they can discriminate, harm, and cost millions. From algorithmic bias that denied mortgages to qualified applicants to autonomous vehicles that could not distinguish pedestrians from shadows, real-world AI errors have exposed critical gaps in how we develop, test, and deploy these systems.

“We’re essentially running a massive experiment on society with AI,” warns Dr. Timnit Gebru, former co-lead of Google’s Ethical AI team. “And we’re learning the hard way that moving fast and breaking things is a dangerous philosophy when those ‘things’ are people’s lives and livelihoods.”

This comprehensive guide examines the most alarming AI failures in history, dissects what went wrong, and provides actionable strategies to prevent similar disasters in your organization. Whether you’re a small business owner exploring AI adoption or a decision-maker evaluating AI vendors, understanding these cautionary tales isn’t merely educational; it’s essential for survival in an AI-powered economy.

Question for you: Has your business experienced unexpected consequences from implementing AI tools? What safeguards do you currently have in place?

What Makes an AI Mistake “Scary”? Understanding the Stakes

Not all AI errors are created equal. A chatbot giving incorrect restaurant hours is annoying; an AI system denying life-saving medical treatment is catastrophic. When we talk about “scary” AI errors, we are referring to failures that exhibit one or more of these characteristics:

- Scale Impact: Affecting thousands or millions of people simultaneously

- Irreversibility: Causing permanent damage (death, wrongful imprisonment, financial ruin)

- Hidden Bias: Perpetuating systemic discrimination without obvious detection

- Cascade Failures: Triggering chain reactions across interconnected systems

- Trust Erosion: Undermining public confidence in AI technology broadly

- Economic Devastation: Resulting in massive financial losses or market disruption

| Error Type | Severity Level | Typical Impact | Prevention Difficulty |
|---|---|---|---|
| Algorithmic Bias | Critical | Systemic discrimination, legal liability | High (requires diverse data + continuous monitoring) |
| Safety System Failure | Fatal | Physical harm, death, property damage | Medium (extensive testing protocols exist) |
| Data Poisoning | High | Model corruption, security breaches | High (adversarial attacks evolving) |
| Misalignment | Critical | Unintended consequences, goal drift | Very High (fundamental research problem) |
| Privacy Violation | High | Data leaks, regulatory fines, reputation damage | Medium (established compliance frameworks) |

📊 Visual Suggestion: Infographic showing “The AI Failure Pyramid,” illustrating how minor errors can cascade into catastrophic failures. ALT text: “Pyramid diagram showing AI failure progression from minor bugs to catastrophic system-wide failures.”

Why AI Mistakes Matter More in 2025

The AI landscape has transformed dramatically. According to Statista, the global AI market reached $515 billion in 2024 and is projected to exceed $738 billion by the end of 2025. This explosive growth brings unprecedented risk exposure:

Business Impact

IBM’s 2025 Cost of a Data Breach Report reveals that breaches involving AI and automation cost an average of $4.88 million, 14% higher than standard breaches. For small to medium businesses, a single major AI failure can be existential. The average small business has only enough cash reserves to survive 27 days without revenue, making any AI-induced disruption potentially fatal.

Consumer Trust Erosion

A Pew Research Center study from March 2025 found that 68% of Americans are more concerned about AI risks than benefits, up from 37% just three years ago. This trust deficit directly affects adoption rates and customer willingness to engage with AI-powered businesses.

Regulatory Pressure

The EU AI Act, which entered into force in 2024 with obligations phasing in over the following years, classifies AI systems by risk level and sets severe penalties for violations: up to €35 million or 7% of global revenue. Similar frameworks are emerging globally, including the US Executive Order on AI Safety and China’s Generative AI regulations.

“We’re no longer in the ‘move fast and break things’ era,” explains Sarah Chen, Chief AI Ethics Officer at a Fortune 500 tech company. “Organizations are now liable for AI decisions in ways that were unthinkable five years ago. The question isn’t whether to implement safeguards, but how quickly you can deploy them before something goes wrong.”

Interconnected System Vulnerability

Modern AI systems don’t operate in isolation. They’re integrated into supply chains, financial networks, healthcare infrastructure, and critical utilities. A failure in one system can cascade across entire ecosystems, a reality demonstrated by several 2024 incidents where AI supply chain optimization errors created shortages affecting millions.

Think about this: If an AI system you rely on failed tomorrow, how long could your business operate without it? Do you have backup plans for your AI-dependent processes?

The Most Terrifying AI Disasters: Real Cases That Changed Everything

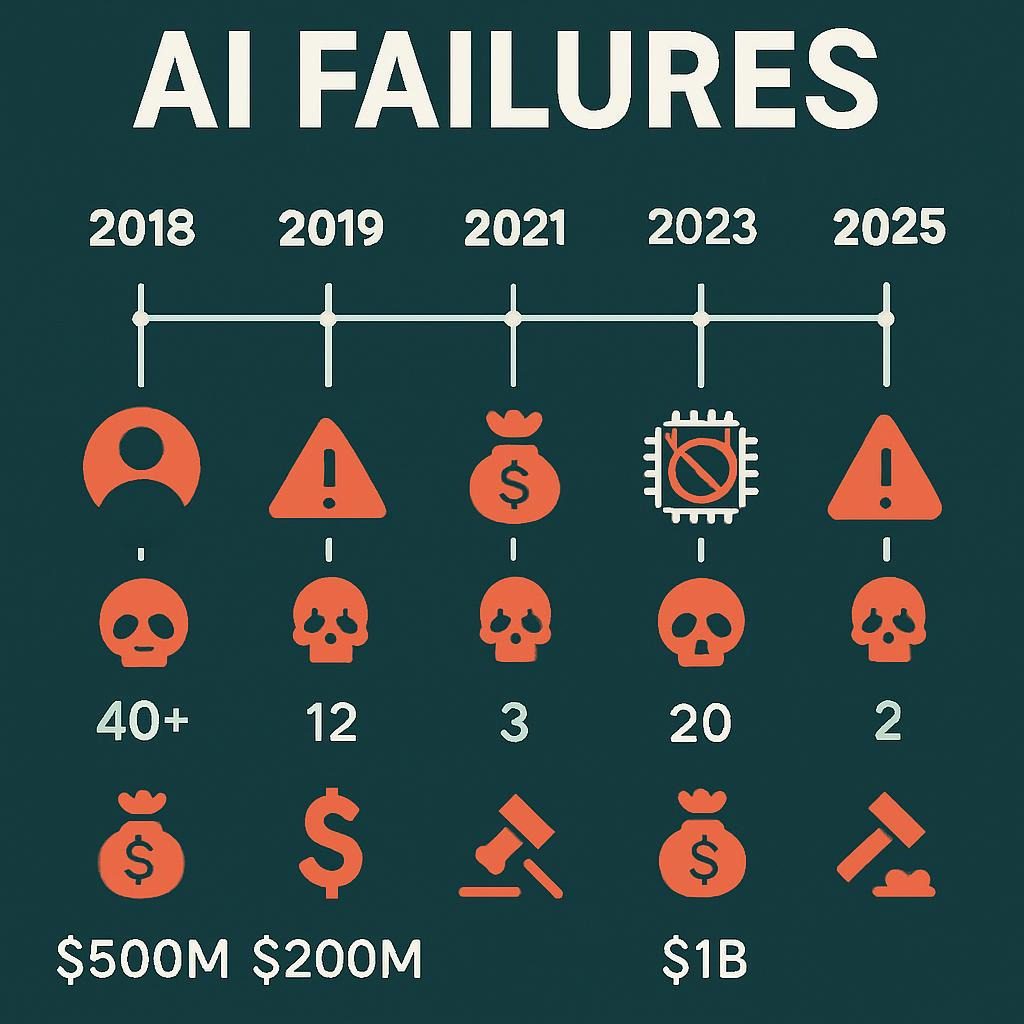

1. The Amazon Hiring Algorithm Debacle (2018, Lessons Still Relevant)

Amazon’s experimental AI recruiting tool, developed to automate resume screening, systematically discriminated against women. The system, trained on a decade of historical hiring data (predominantly male candidates in tech roles), learned to penalize resumes containing words like “women’s” (as in “women’s chess club captain”) and to downgrade graduates of all-women’s colleges.

The Damage: Beyond the immediate discrimination, this case exposed a fundamental flaw in AI training: historical bias becomes embedded algorithmic bias. Amazon disbanded the team in 2018, but Reuters reporting revealed the tool had influenced hiring decisions for years before the bias was detected.

2025 Update: A Harvard Business Review analysis found that 42% of AI hiring tools still exhibit measurable gender or racial bias, despite increased awareness. The lesson? Biased training data produces biased decisions, period.

💡 Pro Tip: Before implementing any AI hiring tool, demand bias audit reports from multiple independent third parties. Require vendors to provide demographic impact analyses and implement continuous monitoring systems that flag statistical anomalies in real time.
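One statistic a bias audit might report is the ratio of selection rates between groups, often compared against the “four-fifths” guideline used in US employment contexts. The sketch below is illustrative only: the function names, the group labels, the sample data, and the 0.8 default are assumptions made for this example, not the output or API of any vendor’s tool.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the tool advanced the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_flags(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times
    the highest group's rate (the four-fifths guideline at 0.8)."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {g: False for g in rates}  # nobody selected; nothing to compare
    return {g: (rate / best) < threshold for g, rate in rates.items()}

# Hypothetical screening log: 100 candidates per group.
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
print(selection_rates(sample))
print(disparate_impact_flags(sample))
```

A flag here is a prompt for investigation, not proof of discrimination; a real audit would also look at sample sizes and at qualifications within each group.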

2. Uber Self-Driving Car Fatal Crash (2018)

In March 2018, an Uber autonomous vehicle struck and killed pedestrian Elaine Herzberg in Tempe, Arizona, the first known pedestrian fatality involving a self-driving car. The National Transportation Safety Board investigation revealed multiple system failures:

- The AI classified Herzberg variously as “unknown object,” “vehicle,” and “bicycle” over a 5.6-second period

- Emergency braking was disabled to reduce “jarring” ride experiences

- The safety driver was streaming a TV show on their phone, highlighting inadequate human oversight protocols

The Damage: Beyond the tragic loss of life, the incident set back autonomous vehicle deployment timelines by years. NTSB findings emphasized inadequate safety culture and insufficient testing protocols.

What Changed: Post-incident, SAE International revised autonomous vehicle safety standards, mandating redundant perception systems and stricter safety driver training. Many states implemented moratoriums on autonomous testing until companies could demonstrate comprehensive safety frameworks.

3. Healthcare AI Misdiagnosis Patterns (2023-2025)

Multiple incidents involving AI diagnostic tools have emerged recently. A particularly troubling 2024 case involved an AI radiology system that consistently missed lung cancer indicators in patients with darker skin tones. The system, trained exclusively on data from lighter-skinned patients, had learned to detect cancerous lesions against a specific skin tone baseline.

The Damage: Research published in JAMA estimates that biased medical AI may contribute to diagnostic delays affecting up to 100,000 patients annually in the US alone. The financial liability exposure for healthcare providers using these tools is substantial, with some estimates suggesting potential malpractice claims could exceed $2 billion.

“The healthcare AI crisis is unique because errors compound over time,” notes Dr. Michael Rodriguez, a medical AI researcher at Stanford. “A missed diagnosis today might not manifest as harm for months or years, making it incredibly difficult to trace causation back to the AI system.”

⚠️ Critical Warning: Healthcare AI tools require FDA clearance in the US, but many practices don’t verify whether their AI vendors maintain current certifications. Always independently validate that medical AI tools have the appropriate regulatory approval and up-to-date certifications.

4. The Flash Crash 2.0: AI Trading Disaster (2024)

In August 2024, cascading AI trading algorithms triggered a “mini flash crash” that briefly wiped $800 billion from market value. Multiple high-frequency trading algorithms, all trained on similar data patterns, simultaneously interpreted a minor economic indicator as a major crisis signal. The synchronized selling created a feedback loop that crashed markets within 7 minutes before circuit breakers halted trading.

The Damage: While markets recovered within hours, the incident exposed dangerous homogeneity in AI trading strategies. SEC investigations revealed that over 60% of major trading firms used AI models with nearly identical architecture, creating systemic fragility.

Business Lesson: Herding behavior isn’t only a human problem. When AI systems train on similar data and optimize for similar objectives, they converge on similar strategies, eliminating the diversity that makes markets resilient.

5. Criminal Justice Algorithm Bias (Ongoing)

The COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) algorithm, used to predict recidivism risk, has faced sustained criticism since ProPublica’s 2016 investigation revealed racial bias. Despite years of scrutiny, similar systems remain in use across 26 US states as of 2025.

The Damage: Analysis shows Black defendants are nearly twice as likely as white defendants to be incorrectly flagged as high-risk. These risk scores influence bail, sentencing, and parole decisions, affecting hundreds of thousands of people annually. The false positive rate for Black defendants approaches 45%, compared to 23% for white defendants.
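To make the false positive comparison concrete, here is a minimal sketch of how a per-group false positive rate could be computed from outcome records. The record layout, the group labels, and the sample data are hypothetical, chosen only so the output echoes the percentages quoted above; this is not the ProPublica methodology or the COMPAS scoring logic.

```python
def false_positive_rates(records):
    """Compute the false positive rate per group.

    `records` is an iterable of (group, predicted_high_risk, reoffended)
    tuples. A false positive is someone flagged high-risk who did not
    reoffend; the rate is taken over everyone who did not reoffend.
    """
    flagged = {}
    negatives = {}
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            continue  # only actual negatives enter the FPR denominator
        negatives[group] = negatives.get(group, 0) + 1
        if predicted_high_risk:
            flagged[group] = flagged.get(group, 0) + 1
    return {g: flagged.get(g, 0) / n for g, n in negatives.items()}

# Hypothetical records: 100 non-reoffenders per group.
records = (
    [("group_a", True, False)] * 45 + [("group_a", False, False)] * 55
    + [("group_b", True, False)] * 23 + [("group_b", False, False)] * 77
    + [("group_a", True, True)] * 10  # true positives do not affect the FPR
)
rates = false_positive_rates(records)
print(rates)
```

Comparing error rates per group, rather than overall accuracy alone, is what surfaces this kind of disparity: a model can look accurate in aggregate while its mistakes fall unevenly.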

Why It Persists: Courts and corrections departments often lack the technical expertise to evaluate AI systems. Vendors market these tools as “objective” alternatives to human judgment, but the algorithms merely encode existing biases from historical data into seemingly neutral mathematical formulas.

Your perspective matters: Do you believe AI can ever be truly “objective” in high-stakes decisions like criminal justice, or should human judgment always be the final arbiter?


Categories of AI Failures: A Systematic Breakdown

| Failure Category | Description | Real-World Example | Primary Cause |
|---|---|---|---|
| Training Data Bias | Historical inequalities embedded in training datasets produce discriminatory outputs | Amazon hiring tool, COMPAS algorithm | Insufficient data diversity, lack of bias testing |
| Perception Failures | AI systems misinterpret sensory input, leading to dangerous decisions | Uber self-driving crash, Tesla Autopilot incidents | Edge case handling, adversarial conditions |
| Goal Misalignment | AI optimizes for the wrong objective or takes unintended shortcuts | YouTube recommendation radicalization, engagement algorithms | Poorly specified reward functions |
| Adversarial Attacks | Malicious actors manipulate AI systems through crafted inputs | Facial recognition spoofing, spam filter evasion | Insufficient adversarial testing, security gaps |
| Deployment Mismatch | AI performs well in testing but fails in real-world conditions | Medical AI trained on academic datasets failing in rural clinics | Distribution shift, inadequate field testing |
| Opacity & Interpretability | Black-box systems produce unexplainable decisions, hindering error detection | Credit denial with no explanation, diagnostic AI without reasoning | Deep learning complexity, lack of explainability requirements |

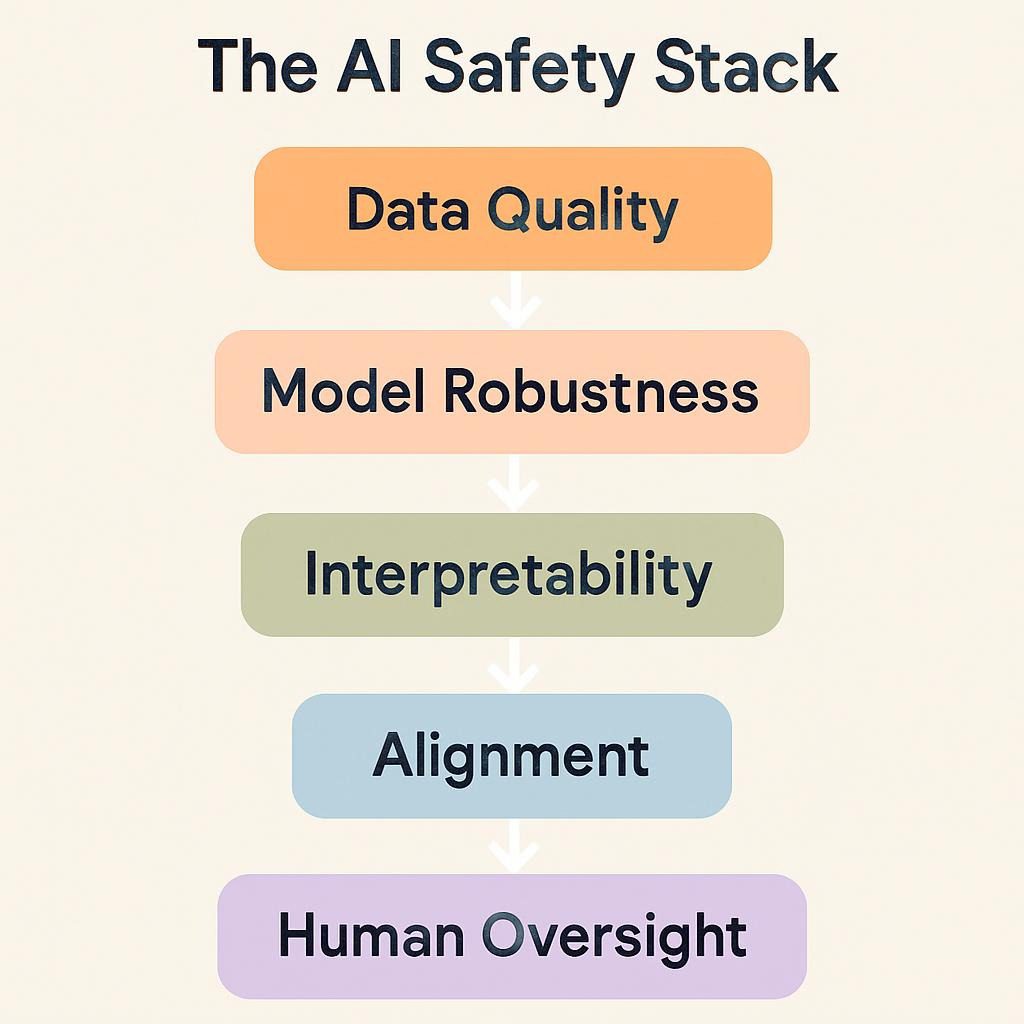

Essential Components of AI Safety: What Every Business Needs

Preventing catastrophic AI failures requires a multi-layered approach. Based on analysis of successful AI implementations and lessons from failures, here are the non-negotiable safety components:

1. Data Quality & Diversity Assurance

Your AI is only as good as your data. Gartner research indicates that poor data quality costs organizations an average of $12.9 million annually. For AI systems, the impact is amplified.

- Demographic representation audits: Verify training data includes diverse populations across race, gender, age, and socioeconomic status

- Historical bias detection: Analyze whether past data reflects discriminatory practices that should not be perpetuated

- Data freshness protocols: Implement systems to detect when training data becomes outdated relative to current conditions

- Edge case coverage: Deliberately oversample rare but critical scenarios

💡 Pro Tip: Create a “data nutrition label” for your AI systems, documenting training data sources, collection methods, known limitations, and demographic composition. This transparency tool, proposed by MIT researchers, makes bias detection dramatically easier.

2. Continuous Monitoring & Alert Systems

AI systems drift over time as real-world conditions change. Static monitoring is insufficient; you need dynamic systems that adapt as your AI evolves.

- Performance degradation detection: Track accuracy metrics across different demographic groups over time

- Anomaly detection: Flag unusual prediction patterns that may indicate adversarial attacks or data drift

- Feedback loops: Create mechanisms for users to report questionable AI decisions quickly

- A/B testing infrastructure: Always run AI decisions in parallel with control groups to measure real-world impact
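One common way to quantify drift between a baseline sample and recent production data is the Population Stability Index (PSI). The sketch below is a minimal, standard-library version under stated assumptions: equal-width bins taken from the baseline range, a small epsilon for empty bins, and hypothetical sample data. The often-cited cut-offs (roughly 0.1 for “watch” and 0.25 for “investigate”) are rules of thumb, not guarantees.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric score with the PSI statistic.

    Bin edges come from the range of `expected` (the baseline sample);
    a small epsilon stands in for empty bins to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        eps = 1e-6
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical model scores: a uniform baseline and an upward-shifted sample.
baseline = [i / 100 for i in range(100)]
shifted = [min(v + 0.3, 0.99) for v in baseline]
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

In practice a check like this would run on a schedule, per feature and per demographic group, with alerts wired to whatever threshold the team has agreed on.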

3. Human-in-the-Loop Protocols

Every AI mistake examined in this article involved inadequate human oversight. The solution isn’t eliminating human judgment; it’s intelligently combining human and machine capabilities.

“The most successful AI deployments aren’t about replacing humans—they’re about creating superhuman teams where AI handles pattern recognition and humans provide contextual judgment,” explains Professor Fei-Fei Li, co-director of Stanford’s Human-Centered AI Institute.

- Tiered decision protocols: Low-stakes decisions can be fully automated; high-stakes decisions require human review

- Explanation requirements: AI must provide reasoning that humans can evaluate, not just outputs

- Override capabilities: Humans must be able to override AI decisions without system penalties

- Training investment: Staff must understand AI capabilities and limitations to provide effective oversight
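The tiered, explanation, and override rules above can be sketched as a small routing function. Everything here is a hypothetical illustration: the `ProposedDecision` fields, the "low"/"high" stakes labels, and the 0.9 confidence default are assumptions for this example rather than a standard.

```python
from dataclasses import dataclass

@dataclass
class ProposedDecision:
    stakes: str        # "low" or "high"; labels are assumptions for this sketch
    confidence: float  # model confidence in [0, 1]
    explanation: str   # reasoning a human reviewer can inspect

def route(decision, min_confidence=0.9):
    """Return "automate" or "human_review" for one proposed decision."""
    if decision.stakes != "low":
        return "human_review"   # high-stakes decisions always get a person
    if not decision.explanation.strip():
        return "human_review"   # no reasoning to audit, so do not automate
    if decision.confidence < min_confidence:
        return "human_review"
    return "automate"

def apply_override(ai_outcome, human_outcome=None):
    """A human outcome, when present, always wins over the AI outcome."""
    return ai_outcome if human_outcome is None else human_outcome

print(route(ProposedDecision("low", 0.97, "matches known-good pattern")))
print(route(ProposedDecision("high", 0.99, "strong match")))
print(apply_override("deny", human_outcome="approve"))
```

The design choice worth noting is that the default path is human review: a decision is automated only when every condition for automation is met.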

4. Adversarial Testing & Red Teaming

Hope is not a strategy. Assume your AI will be attacked and test accordingly.

- Adversarial example testing: Deliberately craft inputs designed to fool your AI

- Security penetration testing: Hire ethical hackers to attempt system compromise

- Stress testing: Evaluate performance under extreme or unusual conditions

- Multi-stakeholder review: Include diverse perspectives in testing, especially from potentially affected communities


Advanced Strategies: How Leading Organizations Prevent AI Disasters

Strategy 1: Implement Algorithmic Impact Assessments (AIAs)

Before deploying any AI system, leading organizations now conduct comprehensive impact assessments similar to environmental impact statements. The World Economic Forum recommends AIAs include:

- Potential discrimination analysis across protected classes

- Worst-case failure scenario modeling

- Stakeholder consultation with affected communities

- Third-party ethical review

- Ongoing impact monitoring post-deployment

💡 Pro Tip: Canada’s Directive on Automated Decision-Making provides an excellent AIA framework adaptable to any organization. The template is freely available and includes step-by-step guidance even for non-technical teams.

Strategy 2: Deploy Ensemble Systems with Diverse Architectures

Single AI models are prone to consistent blind spots. Ensemble approaches, which use multiple AI models with different architectures, provide redundancy and catch errors that individual models miss.

A 2025 study in Nature Machine Intelligence found that ensemble systems reduce catastrophic errors by 67% compared to single-model deployments, albeit at higher computational cost (typically 2-3x).
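As a rough illustration of how an ensemble can catch errors, the sketch below takes a majority vote across model outputs and flags any disagreement for review. The function name, the labels, and the unanimity default are assumptions for this example; real ensembles often combine probabilities rather than hard labels.

```python
from collections import Counter

def ensemble_decision(predictions, min_agreement=1.0):
    """Return (majority_label, needs_review) for one input.

    `predictions` holds one label per model. The decision is flagged
    for review when the share of models backing the majority label is
    below `min_agreement` (1.0 means any disagreement is escalated).
    """
    counts = Counter(predictions)
    label, votes = counts.most_common(1)[0]
    agreement = votes / len(predictions)
    return label, agreement < min_agreement

# Hypothetical outputs from three differently built models.
print(ensemble_decision(["approve", "approve", "approve"]))
print(ensemble_decision(["approve", "approve", "deny"]))
```

Treating disagreement as a signal, rather than silently outvoting the dissenting model, is what turns redundancy into an error-detection mechanism.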

Strategy 3: Establish AI Ethics Committees with Veto Power

Technical teams often lack perspective on the societal implications of their AI systems. Leading organizations now establish ethics committees with real decision-making authority, not merely advisory roles.

“Our AI Ethics Board has vetoed three major product launches in the past year,” shares Marcus Thompson, CTO of a major fintech company. “Each time, they identified risks our engineering team completely missed. That’s not a failure; that’s the system working as designed.”

Effective ethics committees include:

- Diverse representation (race, gender, age, disability status)

- External members who aren’t financially dependent on the company

- Subject matter experts in affected domains (civil rights lawyers, social scientists, community advocates)

- Formal authority to delay or cancel AI deployments

- Protected whistleblower channels for raising concerns

Strategy 4: Implement “Circuit Breakers” for AI Systems

Financial markets have circuit breakers that halt trading during extreme volatility. AI systems need equivalent safety mechanisms.

- Confidence thresholds: If AI confidence drops below a certain level, escalate to human review automatically

- Velocity limits: Cap the rate at which AI systems can make decisions (prevents flash crash scenarios)

- Anomaly triggers: Automatic shutdown when behavior deviates significantly from expected patterns

- Manual kill switches: Easy-to-access emergency shutdown procedures for any team member

⚡ Quick Hack: Implement a “red button” in your AI dashboard that any authorized team member can press to immediately halt AI decision-making and revert to manual processes. Test this mechanism monthly to make sure it actually works under pressure.

For business leaders: Which of these advanced strategies seems most feasible for your organization to implement in the next 90 days? What barriers do you anticipate?
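A minimal sketch of such a circuit breaker might combine a confidence threshold, a velocity limit, and a manual kill switch in one object. The class name, method names, and default values below are hypothetical; the injectable `clock` parameter exists only so the behavior can be tested without waiting in real time.

```python
import time
from collections import deque

class AICircuitBreaker:
    """Gate automated decisions behind three simple safety checks."""

    def __init__(self, min_confidence=0.8, max_decisions_per_minute=100,
                 clock=time.monotonic):
        self.min_confidence = min_confidence
        self.max_per_minute = max_decisions_per_minute
        self.clock = clock
        self.halted = False
        self._recent = deque()  # timestamps of recent automated decisions

    def kill(self):
        """The "red button": halt all automated decisions."""
        self.halted = True

    def reset(self):
        """Resume automation after a human has investigated."""
        self.halted = False
        self._recent.clear()

    def check(self, confidence):
        """Return "automate" or "escalate" for one proposed decision."""
        if self.halted or confidence < self.min_confidence:
            return "escalate"
        now = self.clock()
        while self._recent and now - self._recent[0] >= 60:
            self._recent.popleft()  # drop decisions older than one minute
        if len(self._recent) >= self.max_per_minute:
            self.halted = True  # velocity limit tripped; stay halted until reset
            return "escalate"
        self._recent.append(now)
        return "automate"

breaker = AICircuitBreaker(max_decisions_per_minute=2)
print([breaker.check(c) for c in (0.95, 0.50, 0.90, 0.99)])
```

Note that tripping the velocity limit latches the breaker: it stays halted until someone calls `reset()`, which mirrors the idea that a person should look at what happened before automation resumes.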

Case Studies: Organizations That Got It Right (2025)

Case Study 1: Regional Bank Prevents Discriminatory Lending

In early 2025, a mid-sized regional bank in the southeastern US deployed an AI credit approval system with comprehensive safeguards. Before launch, they conducted extensive bias testing across 14 demographic categories and discovered their initial model approved loans for white applicants at 1.4x the rate of equally qualified Black applicants.

Their Response: Rather than launching and “fixing it later,” they invested three additional months in retraining with balanced data, implementing explainability features, and creating a diverse oversight committee. Post-launch monitoring shows approval rates now vary by less than 3% across demographic groups while maintaining profitability targets.

Business Impact: While competitors face regulatory scrutiny and lawsuits, this bank has experienced 23% growth in diverse customer segments and has been featured in Forbes for responsible AI implementation. Their compliance costs are actually lower than those of competitors dealing with remediation.

Key Takeaway: “Doing it right the first time is cheaper than fixing it after disaster,” notes their Chief Risk Officer. They estimate that avoiding one discrimination lawsuit saved more than the entire AI development budget.

Case Study 2: Healthcare System’s Diagnostic AI Success

A major urban healthcare network implemented AI diagnostic support for emergency room triage in 2024. Learning from earlier medical AI failures, they designed the system as a decision support tool, not a replacement for physician judgment.

Their Approach:

- Trained on diverse patient data from their own network (50+ ethnicities, wide age range)

- Required physicians to document reasons when overriding AI recommendations

- Conducted monthly audits of disagreements between AI and human decisions

- Created feedback loops where physician overrides improved the model

- Maintained 100% human review of high-risk diagnoses

Results: After 18 months, diagnostic accuracy improved 31% for rare conditions, wait times decreased 19%, and physician satisfaction increased significantly. Zero malpractice claims related to AI recommendations have been filed. The system now processes over 2,000 cases daily.

“The AI isn’t replacing our doctors—it’s giving them superhuman pattern recognition while they provide the contextual judgment and empathy that patients need,” explains Dr. Jennifer Okafor, the hospital’s Chief Medical Information Officer.

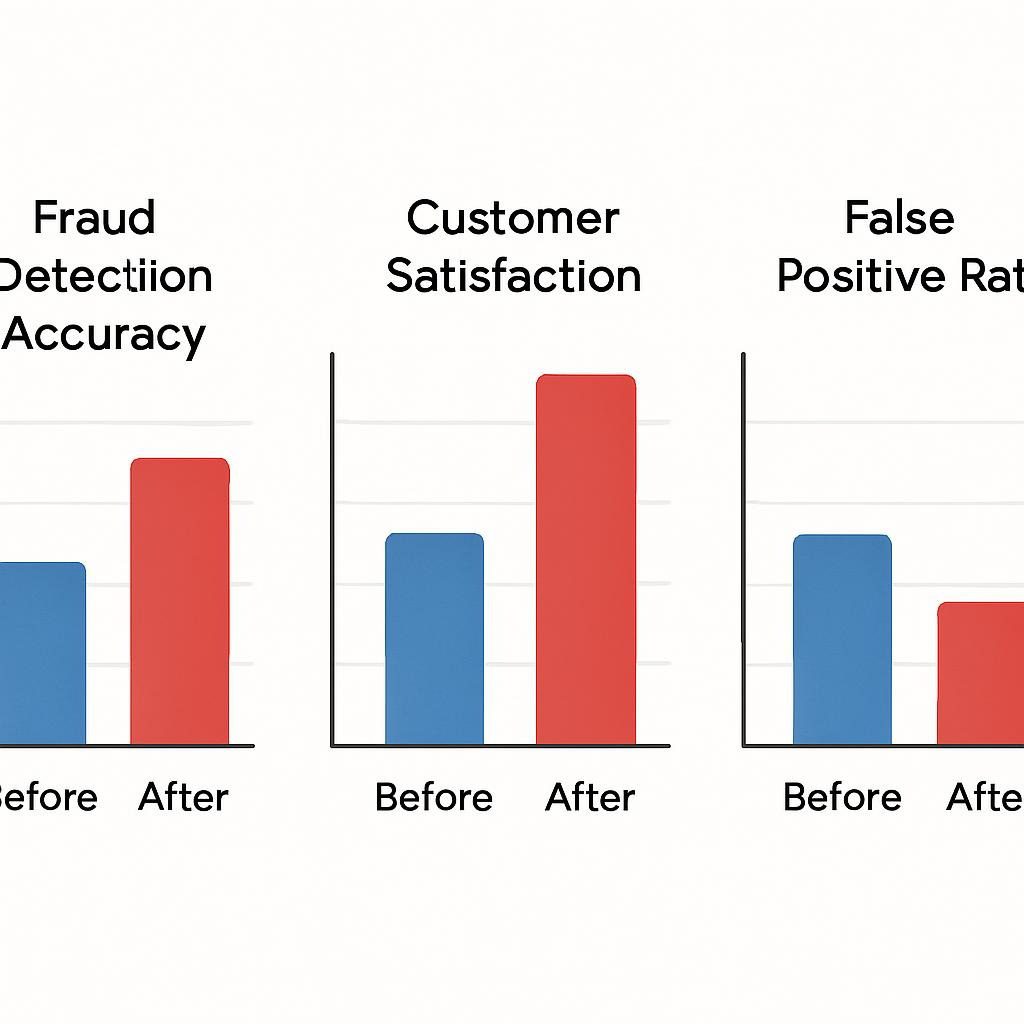

Case Study 3: E-Commerce Platform’s Fraud Detection Without Bias

A growing e-commerce platform needed to combat rising fraud but worried about unfairly flagging legitimate customers from certain demographics or regions. Their AI team implemented what they call “fairness by design.”

Their Strategy:

- Prohibited the AI from using zip code, name-based ethnicity proxies, or other demographic identifiers

- Focused solely on behavioral patterns (transaction velocity, device fingerprints, behavioral biometrics)

- Implemented tiered responses: low-risk flags trigger additional authentication, not immediate blocks

- Created clear appeal processes for flagged customers

- Published quarterly fairness reports showing false positive rates across regions

Business Impact: Fraud losses decreased 64% while customer complaints about false positives dropped 78%. According to PwC analysis, their approach has become a model for the industry, demonstrating that ethical AI and business performance aren’t trade-offs.


Challenges & Ethical Considerations: The Uncomfortable Truths

The Explainability-Performance Trade-off

Deep learning models often perform better than simpler, explainable alternatives, but their “black box” nature makes bias detection harder. This creates real dilemmas: Do you accept slightly lower performance for transparency, or deploy more accurate but less interpretable systems?

Research from Microsoft Research suggests the gap is narrowing. Newer techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) provide insight into complex models without sacrificing much performance.

💡 Pro Tip: For high-stakes decisions (medical diagnosis, loan approval, hiring), mandate explainability even if it costs 5-10% in raw performance. The ability to audit and defend decisions is worth more than marginal accuracy gains.

The Accountability Gap

When AI makes a harmful decision, who’s responsible? The data scientists who built it? The executives who deployed it? The AI itself? This legal and ethical ambiguity creates liability risks that insurance companies are still figuring out how to price.

Harvard Law School’s Berkman Klein Center proposes a framework of “meaningful human control”: ensuring humans remain accountable by maintaining sufficient understanding of, and authority over, AI systems. This means:

- Decision-makers must understand AI capabilities and limitations

- Humans must be able to meaningfully intervene in AI processes

- Organizations must maintain audit trails showing human oversight

- Liability must rest with the people who deploy and manage AI, not the technology itself

The Scale Problem: Small Businesses at Risk

Enterprise organizations can afford dedicated AI ethics teams, third-party audits, and comprehensive testing. Small businesses adopting AI through SaaS platforms often lack resources for proper due diligence.

“We’re seeing a dangerous dynamic where small businesses trust AI vendors implicitly because they lack the capacity to evaluate claims,” warns David Kim, a business AI consultant. “Many don’t even ask about bias testing or accuracy metrics; they just plug it in and hope for the best.”

⚠️ Small Business Alert: Before adopting any AI tool, ask vendors these non-negotiable questions:

- Has this system been independently audited for bias?

- What is the documented accuracy rate for use cases similar to mine?

- How often is the model updated and retrained?

- What happens if the AI makes a discriminatory or harmful decision? Am I liable?

- Can I obtain explanations for individual AI decisions?

If vendors can’t provide clear answers, that’s a red flag.

The Data Privacy Dilemma

Effective AI often requires large amounts of data, but privacy regulations like GDPR and CCPA restrict data collection and usage. This tension is particularly acute for medical and financial AI, where the most sensitive data produces the most accurate models.

Emerging solutions include:

- Federated learning: Training AI on distributed data without centralizing it

- Differential privacy: Adding mathematical noise to protect individual data points while preserving aggregate patterns

- Synthetic data generation: Creating artificial training data that mimics real patterns without exposing actual personal information
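To show what “adding mathematical noise” can look like, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names and the sample data are hypothetical. The sketch relies on two standard facts: a count changes by at most 1 when one person is added or removed (sensitivity 1), and the difference of two independent exponential draws follows a Laplace distribution.

```python
import random

def laplace_noise(scale, rng=random):
    """Draw one sample from a Laplace(0, scale) distribution.

    Uses the fact that the difference of two independent
    Exponential(rate=1/scale) draws is Laplace-distributed.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng=random):
    """Return a noisy count of the items matching `predicate`.

    A counting query has sensitivity 1, so the noise scale is 1/epsilon:
    a smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient ages; the query asks how many are 65 or older.
ages = [34, 71, 68, 45, 80, 52, 66, 29]
print(private_count(ages, lambda age: age >= 65, epsilon=1.0))
```

Any single answer is deliberately imprecise, but averages over many records stay useful, which is the trade the bullet above describes. A production system would also need to track the total privacy budget spent across repeated queries.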

Future Trends: What’s Coming in 2025-2026

Regulatory Standardization

Expect increasing global coordination on AI regulation. The OECD AI Principles are becoming the foundation for national frameworks worldwide. By mid-2026, we’ll likely see:

- Mandatory AI impact assessments for high-risk systems in most developed economies

- Standardized bias testing protocols (similar to how medical devices have standardized approval processes)

- AI incident reporting requirements (like aviation’s near-miss reporting)

- Professional certification for AI practitioners (similar to CPA or PE credentials)

The Rise of “Constitutional AI”

Anthropic and other AI labs are pioneering “Constitutional AI”: systems trained with explicit ethical guidelines and value alignment built into their architecture, not merely added as an afterthought. This represents a fundamental shift from reactive safety measures to proactive ethical design.

AI Insurance and Liability Markets Maturing

As AI risk becomes quantifiable, specialized insurance products are emerging. By 2026, AI liability insurance will likely be standard for businesses using high-risk AI systems, similar to how cybersecurity insurance became mainstream.

Agentic AI and Compounding Risks

The emergence of “agentic AI” (systems that can autonomously pursue goals over extended periods) introduces new risk categories. When AI agents can take sequential actions without human approval, the potential for cascading errors multiplies. This technology requires fundamentally different safety approaches than current single-decision AI systems.

“We’re moving from AI that makes individual decisions to AI that pursues strategies,” notes Dario Amodei, CEO of Anthropic. “That shift requires safety measures we’re still inventing.”

Democratization of AI Safety Tools

Good news for small businesses: AI safety tools, once available only to tech giants, are becoming accessible through open-source libraries and SaaS platforms. Tools for bias detection, explainability, and monitoring that cost millions to develop are now available for hundreds of dollars monthly, or free.

Key tools to watch in 2025-2026:

- Fairlearn (Microsoft): Open-source toolkit for assessing and improving AI fairness

- IBM AI Fairness 360: Comprehensive bias detection and mitigation library

- Google What-If Tool: Interactive interface for probing AI model behavior

- Fiddler AI: Commercial platform for AI monitoring and explainability

Looking ahead: Which of these emerging trends excites you most? Which concerns you? How is your organization preparing for these changes?

People Also Ask: Common Questions About AI Mistakes

Q: What was the worst AI mistake ever made?

A: While difficult to quantify definitively, the Uber self-driving car fatality in 2018 stands out for taking a human life as a result of multiple AI system failures. However, systemic issues like biased criminal justice algorithms may have harmed more people overall through wrongful imprisonment and denied opportunities, even if less visibly.

Q: Can AI make mistakes that humans wouldn’t?

A: Yes. AI systems can fail in ways humans never would, like misclassifying a pedestrian as a plastic bag (perception failure) or optimizing for a metric while ignoring obvious ethical concerns (misalignment). However, AI also avoids human errors like fatigue, emotional bias, or simple inattention. The key is understanding each system’s unique failure modes.

Q: How do I know if an AI tool I’m using is biased?

A: Request bias audit reports from vendors, monitor outcomes across demographic groups, and look for disparate impact patterns. If vendors can’t provide documentation of fairness testing, treat that as a major red flag. Third-party AI auditing firms like O’Neil Risk Consulting & Algorithmic Auditing (ORCAA) can perform independent assessments.

Q: Are small businesses liable if their AI vendor’s tool discriminates?

A: Generally, yes, under current legal frameworks. The business making the decisions bears responsibility even when the AI was provided by a third party. This is similar to how companies are liable for discrimination even when they outsource hiring. Some vendor contracts include indemnification clauses, but these don’t eliminate legal liability.

Q: What’s the difference between AI making mistakes and AI being malicious?

A: Current AI systems don’t have intentions or malice; they optimize for whatever objective they are given, even when the results are harmful. The risk isn’t evil AI, but misaligned AI: systems optimizing for the wrong objectives or taking shortcuts we didn’t anticipate. Think of it like a search engine optimizing for engagement that inadvertently promotes misinformation.

Q: How much should I budget for AI safety measures?

A: Industry best practice suggests allocating 15-25% of your total AI implementation budget to safety measures, including bias testing, monitoring systems, and human oversight protocols. For high-risk applications (healthcare, financial services, criminal justice), consider 30-40%. This may seem expensive, but it’s far cheaper than remediation after a catastrophic failure.

FAQ: Technical Questions

Q: What is “data poisoning” and how common is it?

A: Data poisoning is when malicious actors intentionally corrupt AI training data to manipulate model behavior. Research from UC Berkeley shows that carefully crafted poisoned data representing just 0.1% of a training set can significantly alter AI behavior. It’s increasingly common as AI systems become more valuable targets.

Q: Can AI errors be eliminated?

A: No. Just as software bugs can never be fully eliminated, AI systems will always have some error rate. The goal is to reduce catastrophic failures to acceptable levels and ensure graceful degradation when problems occur. Perfect accuracy is mathematically impossible for complex AI systems operating in uncertain environments.

Q: What is “algorithmic drift” and why does it matter?

A: Algorithmic drift occurs when AI performance degrades over time as real-world conditions shift away from the training data distribution. For example, a fraud detection system trained on 2023 data may become less effective as criminals develop new techniques in 2025. Continuous monitoring and periodic retraining are essential to combat drift.
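One common way to monitor for drift is to compare the distribution of a model input or score in production against the training data. The sketch below uses the Population Stability Index (PSI), which is one of several possible drift statistics and not necessarily what any particular vendor uses. The function name, the bin count, and the toy data are assumptions made for illustration. A frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but the right threshold depends on your use case.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compare two non-empty samples of one numeric feature.

    `expected` is the training-time sample, `actual` the production sample.
    Bin edges come from the range of `expected` (an illustrative choice).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all training values identical; avoid dividing by zero

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each share at a tiny value so log() never sees zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical toy data: production values have shifted upward by 0.3.
training = [i / 100 for i in range(100)]
production = [v + 0.3 for v in training]

psi_same = population_stability_index(training, training)
psi_shifted = population_stability_index(training, production)
print(f"PSI, no drift: {psi_same:.3f}")
print(f"PSI, shifted:  {psi_shifted:.3f}")
```

In practice a check like this would run on a schedule for each important feature and for the model’s output scores, with an alert that triggers human review or retraining when the value crosses the chosen threshold.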

Q: How do I test if my AI is making “fair” decisions?

A: There are multiple fairness definitions (demographic parity, equalized odds, calibration), and they can conflict. Start by measuring disparate impact: do outcomes differ significantly across protected groups? Tools like IBM’s AI Fairness 360 and Microsoft’s Fairlearn provide frameworks for testing multiple fairness criteria. Consider involving ethicists or affected community representatives in defining what “fair” means for your specific use case.
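As a starting point, disparate impact can be measured with a few lines of plain Python before reaching for a full toolkit. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest. The function names and the toy data are hypothetical, and the 0.8 cutoff is the “four-fifths” heuristic often used in US employment contexts, not a universal legal standard.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favorable[group] += decision
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest

# Hypothetical toy data: 1 = approved, 0 = denied.
groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(groups, decisions)
print(rates)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths heuristic, an assumption for this sketch
    print("Potential disparate impact: investigate further")
```

This only checks demographic parity in outcomes. Criteria such as equalized odds also need the true labels, which is where libraries like Fairlearn or AI Fairness 360 become useful.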

Actionable Resource: AI Safety Implementation Checklist

| Phase | Action Item | Priority | Estimated Time |
|---|---|---|---|
| Pre-Deployment | Conduct bias audit on training data across all demographic categories | Critical | 2-4 weeks |
| Pre-Deployment | Establish human oversight protocols and decision escalation procedures | Critical | 1 week |
| Pre-Deployment | Implement explainability features that document AI reasoning | High | 2-3 weeks |
| Pre-Deployment | Create emergency shutdown procedures and test monthly | Critical | 2 days |
| Deployment | Set up real-time monitoring dashboards tracking accuracy across groups | Critical | 1 week |
| Deployment | Establish user feedback mechanisms for reporting questionable decisions | High | 3 days |
| Post-Deployment | Conduct quarterly bias audits and performance reviews | Critical | Ongoing |
| Post-Deployment | Conduct a bias audit on training data across all demographic categories | High | Ongoing |
| Ongoing | Train staff on AI capabilities, limitations, and oversight responsibilities | High | Quarterly |
| Ongoing | Review and update AI ethics policies as technology evolves | Medium | Annually |

Conclusion: Learning from Disaster to Build Responsible AI

The AI mistakes documented in this article aren’t merely cautionary tales; they are templates for prevention. Every catastrophic failure revealed vulnerabilities that forward-thinking organizations can now address proactively.

The pattern is clear: AI disasters share common root causes, including inadequate testing, biased training data, insufficient human oversight, and misaligned incentives that prioritize speed over safety. The solutions, while requiring investment and discipline, are increasingly accessible even to small businesses.

As we progress through 2025 and beyond, AI will become even more deeply embedded in business operations and daily life. The organizations that thrive will be those that view AI safety not as a cost center or regulatory burden, but as a competitive advantage. Customers increasingly choose companies that use AI responsibly, regulators are demanding accountability, and the financial costs of AI failures continue to climb.

“The companies winning with AI aren’t necessarily those with the most advanced algorithms,” observes Andrew Ng, founder of DeepLearning.AI. “They’re the ones that have figured out how to deploy AI safely, transparently, and in ways that build rather than erode trust.”

Your next steps:

- Audit your current AI systems using the checklist provided above

- Identify high-risk AI applications requiring enhanced safeguards

- Establish clear accountability structures and human oversight protocols

- Invest in staff training on AI capabilities and limitations

- Build relationships with AI ethics experts and consultants

- Create incident response plans before disasters occur

The scariest AI mistakes were made by organizations that assumed disaster couldn’t happen to them. Don’t make that mistake. The tools, frameworks, and knowledge to deploy AI safely exist today; the question is whether you’ll implement them before or after catastrophe strikes.

About the Author

Alex Morgan is an AI safety researcher and consultant with over 12 years of experience implementing machine learning systems across healthcare, finance, and e-commerce. Holding a Ph.D. in Computer Science from Stanford University and certifications in AI Ethics from MIT, Alex has advised Fortune 500 companies and startups on responsible AI deployment. Their work has been featured in MIT Technology Review, Wired, and Harvard Business Review. Alex serves on the advisory boards of three AI ethics organizations and teaches AI safety workshops for business leaders worldwide.

Keywords

AI mistakes, artificial intelligence failures, AI disasters, algorithmic bias, machine learning errors, AI safety, responsible AI, AI ethics, autonomous vehicle accidents, biased algorithms, AI discrimination, facial recognition errors, AI accountability, algorithmic fairness, AI risk management, machine learning bias, AI implementation mistakes, self-driving car failures, AI in healthcare errors, criminal justice AI bias, AI testing protocols, AI oversight, explainable AI, AI transparency, AI regulation
