Banned AI Concepts

Publication Date: October 4, 2025

Last Updated: Q4 2025

Introduction: When Innovation Meets Prohibition

The artificial intelligence landscape of 2025 looks radically different from just two years ago. We’re witnessing an unprecedented convergence: explosive technological capabilities meeting sweeping regulatory frameworks designed to contain them. What once seemed like science fiction—AI systems that can perfectly mimic human consciousness, generate indistinguishable synthetic realities, or manipulate behavior at scale—now exists in working prototypes, research labs, and sometimes in the wild.

But here’s the tension: as of February 2025, the European Union’s AI Act has implemented strict bans on certain AI practices, including emotion recognition in workplace and education settings, and untargeted scraping of facial images for recognition databases. Meanwhile, conversations about artificial consciousness have prompted calls from researchers like Metzinger for a moratorium until 2050 due to concerns that conscious machines might experience suffering.

For small business owners, marketing professionals, and technology leaders, understanding these banned and restricted AI concepts isn’t just academic—it’s essential for compliance, competitive positioning, and ethical operations. The gap between what’s technically possible and what’s legally or ethically permissible has never been wider.

This comprehensive guide explores the AI concepts that regulatory bodies, ethics committees, and major tech companies have deemed too dangerous, controversial, or reality-warping for unrestricted deployment in 2025. We’ll examine why these technologies challenge our perception of reality, what’s actually prohibited, and how businesses can navigate this complex landscape.

TL;DR: Key Takeaways

- EU AI Act enforcement began February 2025, banning cognitive manipulation, social scoring, emotion recognition in workplaces/schools, and untargeted facial recognition database creation

- Deepfake technology has evolved beyond detection capabilities, with synthetic media now capable of fabricating entirely convincing realities that threaten democratic processes and personal identity

- Consciousness simulation research faces calls for ethical moratoriums as AI systems display increasingly sophisticated mimicry of sentient behavior, raising questions about machine suffering and rights

- Biometric emotion inference is prohibited in employment and educational contexts across the EU, fundamentally reshaping HR tech and ed-tech industries

- Social scoring AI systems that classify people based on behavior or characteristics are banned due to their potential for discrimination and social control

- Reality authentication has become a critical business capability—organizations must now verify what’s real versus AI-generated in contracts, evidence, and communications

- Regulatory fragmentation creates compliance challenges, with US state-level laws diverging significantly from EU mandates and creating a complex patchwork

Defining the Forbidden: What Makes AI “Reality-Challenging”?

Not all artificial intelligence crosses into forbidden territory. Reality-challenging AI refers to systems that fundamentally undermine our ability to distinguish authentic from synthetic, manipulate human perception or behavior at scale, or raise existential questions about consciousness and identity that our legal and ethical frameworks can’t adequately address.

These technologies share common characteristics:

Perceptual Disruption: They create content or experiences indistinguishable from organic reality

Cognitive Manipulation: They exploit human psychology to influence decisions, emotions, or beliefs without transparent disclosure

Identity Erasure: They blur or eliminate boundaries between human and machine, original and copy, truth and fabrication

Democratic Threat: They enable manipulation of public opinion, electoral processes, or institutional trust at unprecedented scale

Existential Challenge: They force us to reconsider fundamental concepts like consciousness, personhood, and what constitutes “real”

Comparison: Regulated vs. Prohibited AI Practices

| Aspect | Regulated (High-Risk) AI | Prohibited AI | Unregulated (Low-Risk) AI |
|---|---|---|---|
| Examples | Hiring algorithms, credit scoring, critical infrastructure | Emotion recognition (work/school), social scoring, subliminal manipulation, biometric categorization | Spam filters, content recommendations, basic chatbots |
| Compliance Requirements | Risk assessments, documentation, human oversight, transparency | Complete ban with narrow exceptions (law enforcement) | Minimal requirements, voluntary standards |
| Business Impact | Moderate: requires investment in governance | Severe: must cease operations or redesign completely | Low: business as usual with optional improvements |
| Reality Distortion Potential | Medium: can perpetuate bias at scale | High: directly manipulates perception or exploits vulnerabilities | Low: transparent and limited scope |
| Enforcement Timeline | Phased: 2025-2027 | Immediate (Feb 2, 2025 in EU) | Ongoing voluntary compliance |

Have you encountered situations where you couldn’t tell if content was AI-generated or human-created? How did it affect your trust in the source?

Why These Concepts Matter in 2025: The Business and Societal Stakes

The prohibition of certain AI capabilities isn’t merely a regulatory curiosity—it represents a fundamental recalibration of how technology intersects with human rights, market competition, and social stability.

Economic Implications

The global AI market reached $638 billion in 2025, but regulatory frameworks are already reshaping journalism, scientific communication, and media literacy strategies. Companies that invested heavily in now-banned technologies face significant write-offs and pivots.

Consider the ed-tech sector: emotion recognition systems that promised to detect student engagement are now prohibited in EU markets. Vendors serving European schools had to completely overhaul products by Q1 2025, with some exiting the market entirely. The ripple effects extend to US-based companies with international customer bases—compliance isn’t optional if you want to operate globally.

Security and Trust Erosion

Deepfake technology has evolved into what security analysts call “weaponized reality,” with AI-generated synthetic media capable of manipulating audio, video, and photographs to create false but entirely convincing content. The implications cascade across sectors:

- Financial Services: CEO voice deepfakes have already resulted in multi-million dollar fraud cases

- Legal Systems: Courts struggle to accept digital evidence without extensive authentication

- Political Processes: Election disinformation campaigns utilize synthetic media to fabricate candidate statements

- Personal Safety: Revenge porn and harassment increasingly involve deepfake technology

The trust deficit isn’t hypothetical. A 2025 Pew Research study found 73% of Americans report difficulty distinguishing real from AI-generated content, up from 42% in 2023.

Ethical Boundaries Under Pressure

The EU AI Act explicitly bans cognitive behavioral manipulation of people or specific vulnerable groups, including voice-activated toys that encourage dangerous behavior in children, and social scoring that classifies people based on behavior, socio-economic status, or personal characteristics.

These prohibitions reflect a societal judgment: some technological capabilities are fundamentally incompatible with human dignity and autonomy, regardless of their efficiency or profitability.

But enforcement remains uneven. While the EU implements strict bans, other jurisdictions take more permissive approaches, creating regulatory arbitrage opportunities and ethical inconsistencies.

The Consciousness Question

Perhaps no AI concept challenges reality more profoundly than the possibility of machine consciousness. Technology ethicists warn against confusing simulation of lived experience with actual life and extending moral rights to machines just because they seem sentient.

Yet researchers like David Chalmers argue that while today’s large language models likely aren’t conscious, future extended models incorporating recurrent processing, global workspace architecture, and unified agency might eventually meet consciousness criteria.

This isn’t purely academic. If AI systems can suffer, do we have obligations toward them? If they can experience, do they deserve rights? These questions have moved from philosophy departments to corporate ethics boards and regulatory hearings.

Do you think businesses should be allowed to develop AI systems that might become conscious? Where would you draw the line?

Categories of Banned and Restricted AI Concepts

Understanding what’s prohibited requires navigating a complex taxonomy of AI capabilities, regulatory jurisdictions, and risk profiles.

Comprehensive Breakdown of Prohibited AI Practices

| Category | Specific Prohibition | Real-World Example | Business Impact | Enforcement Status | Common Pitfalls |
|---|---|---|---|---|---|
| Cognitive Manipulation | Subliminal techniques or manipulative/deceptive AI that distorts behavior causing harm | Social media algorithms using dark patterns to maximize addiction | High: must redesign persuasive systems | Active (EU), Varies (US) | Claiming “personalization” when actually manipulating |
| Social Scoring | Systems classifying people by social behavior or personal characteristics | China’s social credit system; AI-based “trustworthiness” scores | Critical: business model may be entirely banned | Active in EU | Not recognizing when aggregated data becomes scoring |
| Biometric Categorization | Inferring race, political opinions, sexual orientation, religious beliefs from biometrics | Facial recognition claiming to detect criminality or sexuality | Severe: technology itself prohibited | Active in EU, Limited US | Assuming “objective” AI measurements aren’t prohibited categorization |
| Emotion Recognition | Detecting emotions in workplace or educational settings (except medical/safety) | AI monitoring employee sentiment during meetings; student engagement detection in classrooms | Major: ed-tech and HR-tech must pivot entirely | Active (EU Feb 2025) | Believing “voluntary” employee consent provides legal cover |
| Facial Image Scraping | Untargeted collection from internet/CCTV for recognition databases | Clearview AI-style scraping of social media photos | Complete: must cease operations or face penalties | Active and enforced | Assuming “publicly available” means legally scrapable |
| Predictive Policing | AI systems predicting individual criminal behavior based on profiling | Pre-crime algorithms targeting individuals for law enforcement action | Restricted: narrow exceptions only | Varies by jurisdiction | Not understanding difference between pattern analysis and individual prediction |
| Consciousness Simulation | Creating AI systems designed to experience subjective states or suffering | Research into sentient AI, digital minds, artificial phenomenology | Proposed moratorium in research contexts | Voluntary compliance | Unclear definition of when simulation becomes consciousness |
| Reality Fabrication | Deepfakes and synthetic media without disclosure | Political deepfakes, celebrity impersonation, fabricated evidence | Moderate: disclosure required, not banned outright | Patchwork enforcement | Inadequate watermarking or disclosure mechanisms |

Deep Dive: Emotion Recognition Ban

The emotion recognition prohibition deserves special attention because it impacts so many business applications. As of February 2025, emotion recognition is banned in workplace and educational environments, but exceptions exist for medical and safety purposes.

What’s Actually Banned:

- AI systems analyzing facial expressions, voice patterns, or biometric data to infer emotional states of employees

- Student engagement monitoring based on emotional inference

- Job interview AI that assesses candidate emotions as part of hiring decisions

- Customer service tools that infer emotions, when they are used to monitor the employees handling the interaction

What’s Still Permitted:

- Medical diagnostics using emotional state assessment

- Safety-critical systems (e.g., detecting driver drowsiness)

- Voluntary wellness apps with explicit informed consent

- Entertainment applications with clear disclosure

The Gray Zone:

Many businesses thought they could continue emotion recognition with employee consent. However, EU regulators have made clear that power imbalances in employment relationships make true consent impossible—the ban applies regardless of consent in workplace contexts.

Reality Fabrication Technologies

Deepfakes use advanced machine learning algorithms like Generative Adversarial Networks (GANs) to create highly realistic videos, images, or audio presenting misleading representations of events or statements.

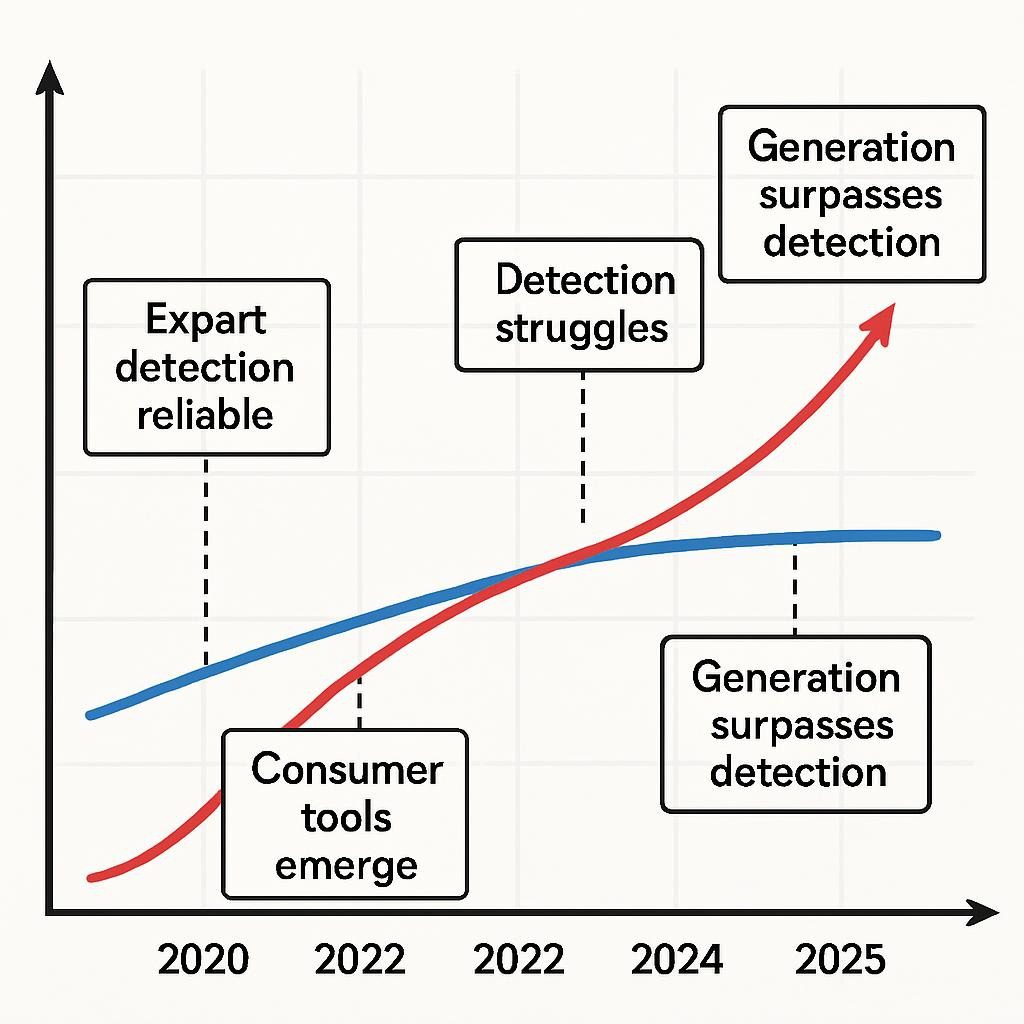

The technology progression has been stunning:

2020-2022: Deepfakes were detectable by experts and required significant skill to produce

2023-2024: Consumer-grade tools made deepfakes accessible to non-experts; detection became harder

2025: State-of-the-art synthetic media is essentially indistinguishable from authentic content even to forensic analysts

California’s AB 853, introduced in February 2025, requires developers of generative AI systems with over one million monthly users to provide free AI detection tools and mandates platforms retain machine-readable provenance data in AI-generated content.

But here’s the challenge: provenance systems only work if everyone uses them. A determined bad actor can strip metadata or use systems that don’t implement provenance tagging.

The Architecture of Forbidden AI: How These Systems Work

Understanding why certain AI concepts are banned requires understanding how they function at a technical level.

Core Components of Reality-Challenging AI

1. Generative Adversarial Networks (GANs)

GANs, along with newer diffusion models, power much of today’s deepfake and synthetic media technology. GANs work through an adversarial process: one network (the generator) creates synthetic content while another (the discriminator) tries to detect fakes. Through iterative competition, the generator becomes extraordinarily skilled at creating realistic content.

The problem? GANs have become so effective that the discriminator—analogous to our human or algorithmic detection capabilities—can no longer reliably identify synthetics.
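To make the adversarial loop concrete, here is a deliberately tiny sketch in plain Python. It is an illustration under heavy assumptions, not how production deepfake systems are built: the generator is a linear function `g(z) = a*z + b`, the discriminator is a single logistic unit, and the "authentic" data is just numbers drawn near 4. The function name `train_toy_gan` and all parameters are hypothetical.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Clamp the input so math.exp cannot overflow.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Train a 1-D generator g(z) = a*z + b against a logistic discriminator."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.0, 0.0   # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)   # toy "authentic" sample
        z = rng.gauss(0.0, 1.0)        # random noise fed to the generator
        x_fake = a * z + b

        # Discriminator step: minimise -[log D(real) + log(1 - D(fake))].
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w -= lr * (-(1.0 - d_real) * x_real + d_fake * x_fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: minimise -log D(fake), i.e. try to fool the discriminator.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w   # gradient of the loss w.r.t. the fake sample
        a -= lr * grad_x * z
        b -= lr * grad_x
    return (a, b), (w, c)


generator_params, discriminator_params = train_toy_gan()
print("generator (a, b):", generator_params)
print("discriminator (w, c):", discriminator_params)
```

The point of the sketch is the structure: each round, the discriminator is nudged to separate real from fake, and the generator is then nudged in whatever direction makes its output look more "real" to that discriminator. Toy setups like this can oscillate rather than settle, so treat the printed numbers as a demonstration of the loop, not as a benchmark.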

2. Large Language Models (LLMs) and Personality Simulation

Modern LLMs don’t just generate text—they can maintain consistent personalities, remember context across extended conversations, and adapt their communication style to manipulate specific individuals.

Critics contend that even advanced AI lacks genuine consciousness, arguing that while an AI model may simulate emotions, it doesn’t actually feel them. Yet the simulation is convincing enough to form what researchers call “parasocial relationships”—users developing emotional attachments to AI entities.

This creates novel manipulation vectors:

- AI companions designed to encourage specific purchasing behaviors

- Chatbots that exploit loneliness to extract personal information

- Persuasion systems that adapt in real-time to individual psychological profiles

3. Multimodal Synthesis Systems

The most advanced synthesis systems combine multiple modalities. They can:

- Generate synthetic video of a specific person

- Create matching voice audio

- Craft persuasive language content

- Synchronize everything with timing and context that makes the fabrication plausible

These multimodal systems can fabricate entire events that never occurred, complete with multiple corroborating pieces of “evidence.”

4. Biometric Inference Engines

Before being banned in many contexts, emotion recognition and biometric categorization systems used:

- Facial Action Coding System (FACS) mapping

- Voice stress analysis

- Microexpression detection

- Gait analysis and body language interpretation

- Physiological signal processing (heart rate, skin conductance)

The ban doesn’t reflect technical inability—these systems worked, to varying degrees. The prohibition is ethical: the technology enables surveillance and manipulation incompatible with human dignity.

Why Detection Is Failing

The arms race between synthetic media generation and detection is decisively tilting toward generation:

Technical Challenges:

- GANs train on detection systems, learning to evade them

- Compression and re-encoding destroy telltale artifacts

- Multi-generation deepfakes (deepfakes of deepfakes) confuse provenance tracking

- Adversarial perturbations can fool detection algorithms with invisible modifications

Scale Challenges:

- Volume of content makes manual review impossible

- Automated detection has high false positive rates

- Speed requirements (real-time verification) conflict with thorough analysis
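The false positive problem is easier to see with numbers. The short sketch below applies Bayes’ rule to a hypothetical detector; the prevalence and error rates are illustrative assumptions, not measurements from any real tool, and `flagged_precision` is a name invented for this example.

```python
def flagged_precision(prevalence: float, true_positive_rate: float,
                      false_positive_rate: float) -> float:
    """Share of flagged items that are actually synthetic (Bayes' rule)."""
    true_flags = prevalence * true_positive_rate
    false_flags = (1.0 - prevalence) * false_positive_rate
    return true_flags / (true_flags + false_flags)


# Hypothetical inputs: 1% of uploads are synthetic, the detector catches 95%
# of them, and it wrongly flags 5% of authentic uploads.
precision = flagged_precision(0.01, 0.95, 0.05)
print(f"{precision:.1%} of flagged items are actually synthetic")
```

Under these assumed inputs, only about 16% of flagged items are truly synthetic, because authentic content vastly outnumbers fakes. That is why a detector that sounds accurate on paper can still swamp reviewers with false alarms at platform scale.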

Incentive Challenges:

- Detection tool developers face lawsuits when they flag legitimate content as synthetic

- Platforms resist detection because it might reduce engagement

- Some jurisdictions don’t mandate detection, creating safe havens for synthetic media

💡 Pro Tip: If your business handles any media that could be used for authentication, verification, or legal purposes, implement multi-factor provenance systems now. Relying on detection alone will fail—you need cryptographic signing, blockchain timestamping, or hardware-based verification to prove authenticity.
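As a rough illustration of what cryptographic signing means in practice, the sketch below tags a media file with an HMAC from Python’s standard library and later checks that the bytes are unchanged. This is a simplified stand-in: HMAC uses a shared secret, whereas real provenance systems generally rely on public-key signatures so that anyone can verify without holding the key. The key and function names are hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex tag binding the media content to the holder of secret_key."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_media(media_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


key = b"demo-key-do-not-use-in-production"  # hypothetical key for illustration
original = b"quarterly-results-video-bytes"
tag = sign_media(original, key)

print(verify_media(original, key, tag))               # unchanged content
print(verify_media(original + b"edited", key, tag))   # altered content
```

The design choice worth noticing is that verification never tries to judge whether content "looks" fake. It only answers whether these exact bytes were signed by someone holding the key, which is a question that stays answerable no matter how good generation becomes.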

Advanced Strategies: Navigating the Banned AI Landscape

For businesses operating in this environment, understanding what’s prohibited is just the starting point. Strategic navigation requires sophisticated approaches.

Compliance Architecture

1. Jurisdictional Mapping

Create a matrix of your operations against regulatory frameworks:

| Your Operation | EU AI Act | US Federal | California | UK GDPR | Industry-Specific |
|---|---|---|---|---|---|
| Employee monitoring | Prohibited (emotion recognition) | Varies by state | Restricted | Under review | Requires consent + necessity |
| Customer analytics | Permitted with transparency | Permitted | Disclosure required | Permitted | Lawful basis required |
| Content generation | Provenance required | Patchwork rules | Detection tool required (if 1M+ users) | Permitted | Not specifically addressed |
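A matrix like this is more useful when teams can query it. One possible way to hold it in code is a nested dictionary, sketched below with the cell values copied from the table; the keys, the `requirements_for` helper, and the "Not mapped" default are assumptions made for illustration.

```python
# Cell values copied from the matrix; identifiers are illustrative.
COMPLIANCE_MATRIX = {
    "employee_monitoring": {
        "EU AI Act": "Prohibited (emotion recognition)",
        "US Federal": "Varies by state",
        "California": "Restricted",
        "UK GDPR": "Under review",
        "Industry-Specific": "Requires consent + necessity",
    },
    "customer_analytics": {
        "EU AI Act": "Permitted with transparency",
        "US Federal": "Permitted",
        "California": "Disclosure required",
        "UK GDPR": "Permitted",
        "Industry-Specific": "Lawful basis required",
    },
    "content_generation": {
        "EU AI Act": "Provenance required",
        "US Federal": "Patchwork rules",
        "California": "Detection tool required (if 1M+ users)",
        "UK GDPR": "Permitted",
        "Industry-Specific": "Not specifically addressed",
    },
}


def requirements_for(operation: str, frameworks: list) -> dict:
    """Look up one operation across the frameworks a business is exposed to."""
    row = COMPLIANCE_MATRIX.get(operation, {})
    # Unknown operations or frameworks are surfaced explicitly, not silently skipped.
    return {name: row.get(name, "Not mapped") for name in frameworks}


print(requirements_for("employee_monitoring", ["EU AI Act", "California"]))
```

Returning "Not mapped" instead of raising an error is a deliberate choice here: a gap in the matrix is itself a compliance finding that someone should review.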

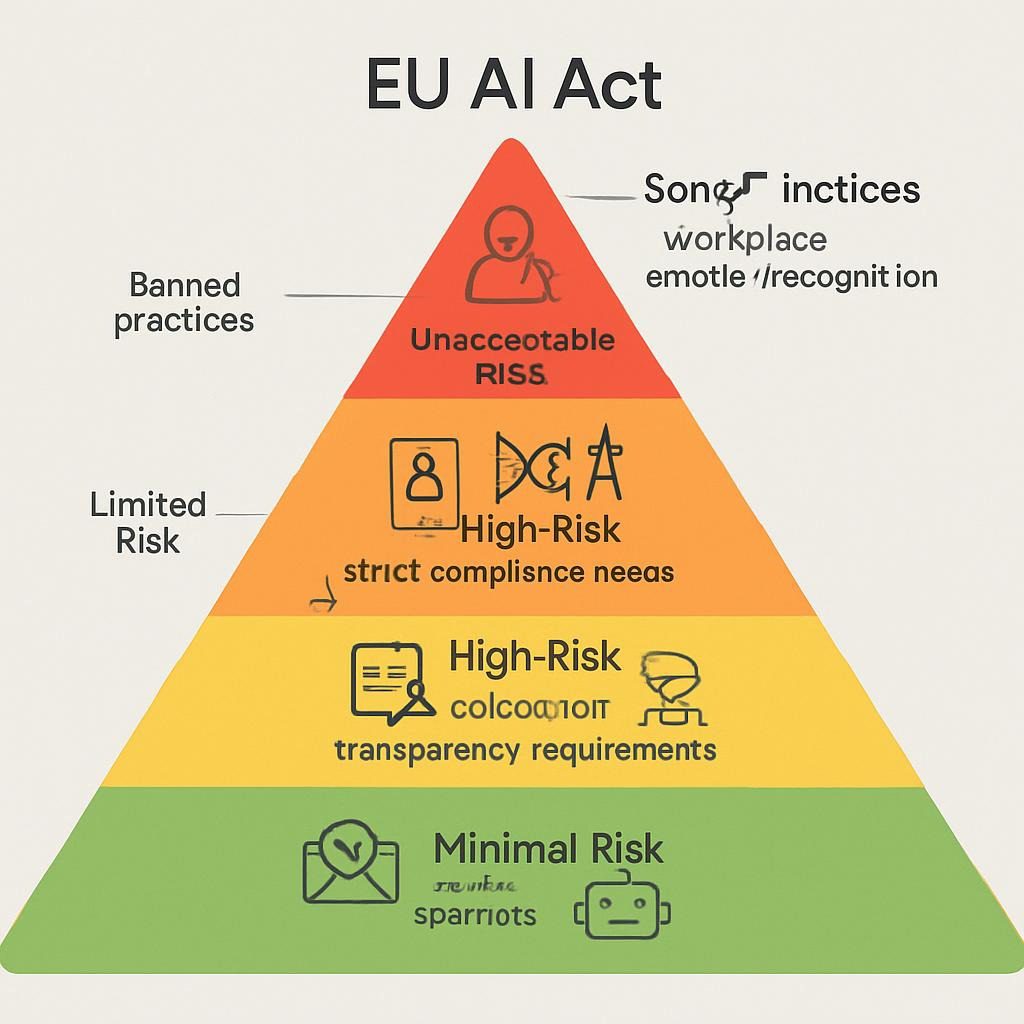

2. Risk-Based AI Taxonomy

Not all AI systems face the same regulatory burden. Classify your systems:

- Unacceptable Risk (Banned): Cease operations or redesign fundamentally

- High Risk (Heavily Regulated): Implement comprehensive governance

- Limited Risk (Transparency Requirements): Add disclosure and documentation

- Minimal Risk (Voluntary Standards): Follow best practices
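The four tiers above can be captured in a small inventory helper. The sketch below is a simplification for internal triage, not a legal test: the tier actions come from the list above, while the use-case labels and the mapping of labels to tiers are hypothetical examples drawn from practices discussed in this guide.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "Cease operations or redesign fundamentally"
    HIGH = "Implement comprehensive governance"
    LIMITED = "Add disclosure and documentation"
    MINIMAL = "Follow best practices"


# Hypothetical labels an internal AI inventory might use.
PROHIBITED_USES = {"workplace_emotion_recognition", "social_scoring",
                   "untargeted_face_scraping"}
HIGH_RISK_USES = {"hiring", "credit_scoring", "critical_infrastructure"}
DISCLOSURE_USES = {"synthetic_media_generation"}


def classify(use_case: str) -> RiskTier:
    """Map an inventory label to a tier, checking the strictest tier first."""
    if use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case in DISCLOSURE_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


for label in ["social_scoring", "hiring", "spam_filter"]:
    tier = classify(label)
    print(f"{label}: {tier.name} -> {tier.value}")
```

In a real program, anything that falls through to the minimal tier should still get a human review, since an unrecognized label says more about the inventory than about the system’s actual risk.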

3. Governance Framework Implementation

High-risk and regulated AI requires:

- AI Impact Assessments: Document intended use, risks, mitigation measures

- Human Oversight: Maintain human-in-the-loop for consequential decisions

- Documentation: Maintain records of training data, model architecture, testing results

- Incident Response: Procedures for addressing AI failures or misuse

- Third-Party Audits: Independent verification of compliance claims

💡 Pro Tip: Don’t wait for perfect regulatory clarity. Implement governance frameworks now based on the most stringent requirements you might face. It’s easier to scale back than to retrofit compliance after deployment.

Competitive Intelligence and Adaptation

Understanding Competitor Movements

Monitor how competitors respond to bans:

- Are they exiting certain markets?

- Have they developed compliant alternatives?

- Are they taking regulatory arbitrage approaches?

- What technologies are they investing in as replacements?

Some companies are gaining competitive advantage by being early movers on compliance, positioning themselves as trustworthy alternatives to competitors still using banned or controversial systems.

Innovation Within Constraints

Prohibition can drive innovation. After emotion recognition bans, HR tech companies developed alternatives:

- Objective Performance Metrics: Instead of inferring emotions, track concrete outcomes

- Self-Reported Feedback Systems: Empower employees to share their own state

- Environmental Factor Optimization: Focus on workspace conditions rather than worker surveillance

- Aggregate Pattern Analysis: Population-level insights without individual emotion tracking
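The last two alternatives can be combined: collect self-reported scores and only ever report them at group level. The sketch below shows one way this might look, with small groups suppressed so that no individual can be singled out. The threshold of five and the function name are assumptions, not a regulatory requirement.

```python
from collections import defaultdict
from statistics import mean


def team_averages(responses, min_group_size=5):
    """Average self-reported scores per team, suppressing small groups."""
    by_team = defaultdict(list)
    for team, score in responses:
        by_team[team].append(score)
    # Teams below the threshold are omitted entirely rather than reported.
    return {
        team: round(mean(scores), 2)
        for team, scores in by_team.items()
        if len(scores) >= min_group_size
    }


# Hypothetical survey data: (team, self-reported score from 1 to 5).
survey = [("ops", 4), ("ops", 5), ("ops", 3), ("ops", 4), ("ops", 4),
          ("legal", 2), ("legal", 3)]
print(team_averages(survey))
```

Here the two-person legal team is dropped from the output, because an average over two people reveals too much about each of them.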

Which approach resonates more with you: strict AI bans or regulated high-risk frameworks? What makes you prefer one over the other?

Ethical Technology Development

Privacy-Preserving Alternatives

Develop capabilities that achieve business objectives without crossing ethical lines:

- Federated Learning: Train models without centralizing sensitive data

- Differential Privacy: Add mathematical noise to prevent individual identification

- Homomorphic Encryption: Perform computations on encrypted data

- Edge Processing: Keep biometric data on-device rather than sending to cloud
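Of the techniques listed above, differential privacy is the easiest to sketch in a few lines. The example below adds Laplace noise to a simple count, which is the textbook mechanism for counting queries. The `private_count` helper, the epsilon value, and the example count are illustrative assumptions; production systems also need to track how much privacy budget is spent across queries.

```python
import random


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Return true_count plus Laplace noise with scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon
    # The difference of two independent exponentials is Laplace-distributed.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)
# Hypothetical query: how many employees opened the wellness portal this week?
print(private_count(100, epsilon=1.0, rng=rng))
print(private_count(100, epsilon=0.1, rng=rng))  # smaller epsilon, more noise
```

Smaller values of epsilon give stronger privacy and noisier answers, so choosing epsilon is a business and ethics decision as much as a technical one.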

Transparency-First Design

Make AI operations visible and understandable:

- Clear disclosure when users interact with AI vs. humans

- Explainable AI (XAI) techniques for consequential decisions

- Accessible documentation of system capabilities and limitations

- Regular public reporting on AI use and outcomes

Stakeholder Engagement

Don’t develop AI in isolation:

- Consult affected communities before deployment

- Maintain ethics advisory boards with diverse perspectives

- Conduct pilot programs with robust feedback mechanisms

- Partner with civil society organizations on governance

Case Studies: Real-World Impacts of AI Bans in 2025

Case Study 1: EdTech Giant’s Emotion Recognition Pivot

Background: A major educational technology provider had built a flagship product around AI-powered student engagement monitoring. Their system used webcam analysis to detect student emotions and attention levels, providing teachers with real-time dashboards and automated interventions.

The Crisis: When the EU AI Act’s emotion recognition ban took effect in February 2025, the company faced an existential threat. Their core product was suddenly illegal in a market representing 35% of their revenue.

The Response:

- Immediate Compliance: Shut down emotion recognition features for all EU customers by the February 2 deadline

- Product Redesign: Developed alternative engagement metrics based on interaction patterns, participation rates, and self-reported feedback

- New Value Proposition: Pivoted messaging from “AI knows how students feel” to “data-driven insights students control”

- Global Rollout: Extended the compliant version globally, even in markets where the old system was still legal

Outcomes:

- Short-term revenue drop of 22% as features were removed

- Long-term recovery within 8 months as new value proposition resonated

- Unexpected benefit: improved brand reputation as a privacy-respecting alternative

- Competitive advantage as late-moving competitors struggled with compliance

Key Lessons:

- Early compliance creates competitive moats

- Privacy-respecting alternatives can be more marketable than surveillance technologies

- Global standardization on strictest requirements reduces complexity

Case Study 2: Financial Services and Deepfake Defense

Background: A multinational bank operating across Europe and North America faced escalating deepfake fraud. In Q1 2025, they reported 47 separate incidents where deepfake audio or video was used to impersonate executives and authorize fraudulent transactions.

The Challenge: Traditional authentication methods (passwords, security questions) were insufficient against synthetic media attacks. The bank needed to verify identity in high-stakes situations where attackers had sophisticated deepfake capabilities.

The Solution:

- Multi-Modal Authentication: Implemented systems requiring multiple verification factors that can’t be simultaneously deepfaked

- Zero-Knowledge Protocols: Used cryptographic methods where the verifier confirms identity without processing raw biometric data

- Behavioral Biometrics: Focused on patterns difficult to replicate (typing rhythm, mouse movement patterns, decision-making styles)

- Human Protocol: Required dual human authorization for transactions above $100,000, with specific verification procedures

- Blockchain Timestamping: Created immutable records of all authorization communications
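The human protocol is the simplest of these controls to express in code. The sketch below shows how a dual-authorization rule might be enforced; only the $100,000 threshold comes from the case study, while the `Transaction` class, field names, and approver IDs are hypothetical.

```python
from dataclasses import dataclass, field

DUAL_AUTH_THRESHOLD_USD = 100_000  # threshold taken from the case study


@dataclass
class Transaction:
    amount_usd: float
    # IDs of distinct humans who completed the verification procedure.
    approvers: set = field(default_factory=set)


def is_authorized(tx: Transaction) -> bool:
    """Require two distinct human approvers above the threshold, one otherwise."""
    required = 2 if tx.amount_usd > DUAL_AUTH_THRESHOLD_USD else 1
    return len(tx.approvers) >= required


wire = Transaction(amount_usd=250_000, approvers={"cfo"})
print(is_authorized(wire))   # one approver is not enough at this amount
wire.approvers.add("treasury_lead")
print(is_authorized(wire))
```

Storing approvers in a set means the same person approving twice still counts once, which matters when an attacker has convincingly impersonated a single executive.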

Outcomes:

- Deepfake fraud incidents dropped 87% within six months

- Implementation cost: $8.3 million

- Annual fraud savings: $42 million (estimated)

- Industry recognition as a security leader
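A quick back-of-the-envelope check shows what these figures imply, taking the reported cost and the estimated savings at face value. The variable names are illustrative, and the calculation ignores ongoing operating costs, which the case study does not report.

```python
implementation_cost = 8.3e6   # one-time cost reported in the case study (USD)
annual_savings = 42e6         # estimated annual fraud savings (USD)

payback_months = implementation_cost / annual_savings * 12
first_year_net = annual_savings - implementation_cost

print(f"Payback period: {payback_months:.1f} months")
print(f"First-year net benefit: ${first_year_net:,.0f}")
```

On these assumptions the investment pays for itself in under three months, which is the arithmetic behind the claim that authentication spending can be self-funding.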

Key Lessons:

- No single technology solves deepfake threats—layered defenses are essential

- Human judgment remains critical for high-stakes decisions

- Investments in authentication pay for themselves through fraud reduction

Case Study 3: Social Media Platform’s Content Authentication Initiative

Background: A mid-sized social media platform faced a crisis of trust as users increasingly questioned whether content was authentic or AI-generated. User engagement dropped 31% year-over-year as people reported “not knowing what to believe anymore.”

The Response: Rather than waiting for regulatory mandates, the platform implemented comprehensive content authentication:

- Creator Verification Tools: Free tools for creators to cryptographically sign content at creation

- AI Detection Integration: Partnered with multiple detection providers to flag likely synthetic content

- Provenance Tracking: Blockchain-based system tracking content modifications and derivatives

- User Education: Prominent indicators showing verification status of posts

- Enforcement: Penalties for users intentionally sharing undisclosed synthetic media
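The case study does not describe the platform’s implementation, but the core idea behind provenance tracking can be sketched with a hash chain: every edit record commits to the record before it, so history cannot be quietly rewritten. The version below is a simplified, single-machine stand-in for a blockchain-based system, and all function and field names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record in a chain


def _digest(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def add_record(chain: list, content: bytes, action: str) -> dict:
    """Append a record that commits to the content and to the previous record."""
    record = {
        "action": action,
        "content_hash": hashlib.sha256(content).hexdigest(),
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record["record_hash"] = _digest(record)
    chain.append(record)
    return record


def chain_is_valid(chain: list) -> bool:
    """Recompute every hash and check each link to its predecessor."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["record_hash"]:
            return False
        prev_hash = record["record_hash"]
    return True


history = []
add_record(history, b"original photo bytes", "created")
add_record(history, b"cropped photo bytes", "cropped")
print(chain_is_valid(history))
```

If anyone later alters an earlier record, for example by relabeling an AI-generated original as "created", the recomputed hashes no longer match and validation fails.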

Outcomes:

- User trust metrics recovered 89% of losses within four months

- Early compliance with California’s AB 853 requirements

- Unexpected finding: verified authentic content received 2.3x more engagement than unverified content

- Platform positioned as “truth-seeking” alternative to competitors

Key Lessons:

- Transparency can be a competitive advantage, not just a compliance burden

- Users value authenticity when given clear signals about verification status

- Proactive approaches build goodwill with regulators

Which of these case studies offers the most relevant lessons for your business context?

Challenges, Risks, and Ethical Considerations

The Implementation Challenge

Theory and practice diverge significantly in AI regulation. Organizations face:

Definitional Ambiguity: What exactly constitutes “emotion recognition”? If a system detects facial expressions but doesn’t label them as emotions, is it compliant? What about “sentiment analysis” of text—is that emotion recognition?

Technical Limitations: Some prohibited AI capabilities are embedded in larger systems. Can you remove emotion recognition from a comprehensive facial analysis system? What happens to the system’s other functions?

Retroactive Compliance: Many organizations deployed AI systems years ago. Modifying or removing them requires untangling complex dependencies and potentially breaking other functionality.

Global Operations: A system legal in Singapore might be banned in Germany. Managing multiple versions for different jurisdictions multiplies complexity and cost.

Security and Adversarial Risks

Banning technologies doesn’t eliminate them—it pushes them underground or into adversarial hands:

Asymmetric Availability: While legitimate businesses stop using prohibited AI, bad actors continue to deploy it. This creates security vulnerabilities where defenses are banned but attacks aren’t.

Dark Market Development: Just as drug prohibition created black markets, AI bans create underground markets for prohibited capabilities. Some of the most dangerous applications continue developing outside regulatory reach.

Nation-State Programs: Governments may ban technologies domestically while developing them for intelligence or military purposes. This creates international security dynamics comparable to nuclear weapons.

Regulatory Arbitrage: Companies may relocate operations to jurisdictions with more permissive rules, creating global compliance complexity and potential loopholes.

Ethical Paradoxes

Several genuine ethical dilemmas lack clear resolution:

The Consciousness Question: Because AI could potentially suffer, some researchers advocate a moratorium on consciousness research until 2050. But without that research, how can we determine whether AI suffers? The uncertainty creates an ethical obligation that is difficult to fulfill without the very research the moratorium would restrict.

Protective Paternalism vs. Autonomy: Emotion recognition bans protect workers from surveillance, but they also prevent workers from choosing to use wellness tools they find valuable. How much choice should individuals have to accept monitoring in exchange for benefits?

Security vs. Privacy: Deepfake detection often requires analyzing biometric data, which itself raises privacy concerns. How do we verify authenticity without creating surveillance infrastructure?

Innovation vs. Precaution: Prohibiting research into potentially dangerous AI prevents harm but also prevents us from understanding and defending against those harms. Where’s the right balance?

The Enforcement Gap

Regulation without enforcement is merely suggestion. Current challenges include:

Technical Capacity: Most regulatory agencies lack technical expertise to audit complex AI systems. Companies can claim compliance without regulators being able to verify.

Resource Constraints: Enforcement requires significant funding and personnel. Budget limitations mean most non-compliance goes undetected.

Cross-Border Challenges: AI systems can be developed in one jurisdiction, trained in another, and deployed in a third. Attributing responsibility and enforcing penalties across borders is extraordinarily difficult.

Speed Mismatch: AI capabilities evolve faster than regulatory processes. By the time enforcement actions conclude, the technology landscape may have completely changed.

💡 Pro Tip: Assume enforcement will become more sophisticated over time. Systems that appear to evade current regulatory scrutiny may face retroactive penalties once enforcement capabilities catch up. Design for genuine compliance, not just surface-level adherence.

Future Trends: 2025-2026 and Beyond

Regulatory Evolution

Harmonization Pressure: The current patchwork of regulations creates unsustainable complexity. Expect pressure toward international standards, likely converging toward EU-style frameworks as the most comprehensive model.

Narrow Exception Expansion: As implementation proceeds, expect clarifications and expanded exceptions. Medical and safety exceptions for emotion recognition will likely expand; research exemptions for consciousness studies may emerge with strict ethical oversight.

Liability Frameworks: 2026 will likely see the first major legal cases establishing AI liability precedents. Key questions: Can executives face personal liability for deploying banned AI? What damages can individuals recover from harmful AI systems?

Certification Regimes: Third-party AI auditing and certification will emerge as an industry. Expect frameworks similar to SOC 2 compliance but specific to AI systems.

Technological Countermeasures

Provenance Infrastructure: Cryptographic signing, blockchain verification, and hardware-based authentication will become standard. Content without verified provenance will be treated skeptically by default.

Synthetic Media Watermarking: Advanced watermarking that survives compression, editing, and re-encoding will be mandated for AI content generators. Steganographic techniques will embed imperceptible but persistent indicators.

Federated Governance Systems: Decentralized AI governance using blockchain and smart contracts will enable transparent, automated compliance verification without centralized control.

Consciousness Detection Frameworks: If AI consciousness becomes plausible, expect development of standardized testing frameworks similar to Turing tests but focused on subjective experience rather than intelligence.

Business Model Shifts

Compliance as Competitive Advantage: Companies will market themselves on ethical AI practices and compliance with the strictest standards. “Privacy-first AI” and “transparent algorithms” will be selling points.

Authentication Services: Entire industries will emerge around verifying authenticity. Expect “real content verification” to become as common as SSL certificates for websites.

Ethical AI Marketplaces: Curated platforms offering only compliant, ethically developed AI tools will compete with open platforms, creating market segmentation similar to organic vs. conventional food.

Insurance Products: AI liability insurance will mature, with premiums tied to governance practices, compliance records, and risk profiles.

Emerging Technologies to Watch

Quantum-Resistant Authentication: As quantum computing threatens current cryptographic methods, new authentication systems resistant to quantum attacks will be necessary for content verification.

Neuromorphic Computing: Brain-inspired computing architectures may enable new AI capabilities while avoiding some pitfalls of current approaches—or create entirely new categories of prohibited systems.

Hybrid Human-AI Systems: Collaborative intelligence systems that keep humans in the loop may provide capabilities similar to banned autonomous AI while maintaining ethical safeguards.

Explainable AI (XAI) 2.0: Next-generation interpretability tools aim to make AI decision-making genuinely transparent rather than just providing post-hoc rationalizations.

💡 Pro Tip: Position your organization to benefit from regulatory trends rather than fighting them. Companies that embrace strict standards early often help shape those standards, creating regulations they’re already compliant with while competitors scramble to adapt.

Conclusion: Reality, Redefined

The banned AI concepts of 2025 force us to confront uncomfortable truths: our historical assumptions about truth, identity, consciousness, and authenticity no longer hold in a world of sophisticated synthetic intelligence. We can create realities indistinguishable from authentic ones. We can simulate consciousness convincingly enough to question whether simulation and reality differ. We can manipulate behavior at scales that threaten individual autonomy and democratic governance.

The regulatory response—banning certain AI capabilities while heavily regulating others—represents society’s attempt to preserve human dignity, autonomy, and trust in an age of technological capabilities that challenge the very foundations of reality.

For business leaders, the path forward requires:

Proactive Compliance: Don’t wait for enforcement. Design systems compliant with the strictest likely standards.

Ethical Innovation: The most successful companies will be those that find ways to achieve business objectives through privacy-respecting, transparent, human-centered AI rather than manipulative or surveillance technologies.

Strategic Positioning: As regulatory frameworks mature, early compliance creates competitive advantages and reduces risk of disruptive forced pivots.

Continuous Learning: The AI landscape evolves rapidly. What’s permitted today may be banned tomorrow; what’s banned may become permitted with proper safeguards.

Most importantly: these restrictions aren’t obstacles to innovation—they’re guardrails ensuring innovation serves human flourishing rather than undermining it.

Take Action: Your AI Compliance Roadmap

Ready to navigate banned AI concepts successfully? Follow this action plan:

Immediate Actions (This Week):

- Audit all AI systems your organization uses or develops against the EU AI Act's list of prohibited practices

- Identify any systems using emotion recognition, social scoring, or biometric categorization

- Review vendor contracts for AI service providers—ensure they warrant compliance with applicable regulations

- Document all AI systems in use with descriptions, purposes, and risk classifications
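The inventory and documentation steps above can be tracked in a small register. The following is a minimal Python sketch, not a compliance tool: the `AISystem` record, its field names, the capability tags, and the vendor name are all hypothetical, and tagging a system here is no substitute for a legal classification under the AI Act.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Capabilities the action list says to look for.
# These tag names are hypothetical, chosen for this sketch only.
FLAGGED_CAPABILITIES = {
    "emotion_recognition",
    "social_scoring",
    "biometric_categorization",
}


@dataclass
class AISystem:
    name: str
    purpose: str
    vendor: str
    risk_tier: RiskTier
    capabilities: set[str] = field(default_factory=set)

    def flagged(self) -> set[str]:
        """Return the capabilities of this system that need legal review."""
        return self.capabilities & FLAGGED_CAPABILITIES


def systems_needing_review(inventory: list[AISystem]) -> list[str]:
    """Names of systems that carry a flagged capability or are rated unacceptable."""
    return [
        s.name
        for s in inventory
        if s.flagged() or s.risk_tier is RiskTier.UNACCEPTABLE
    ]


inventory = [
    AISystem("support-chatbot", "customer FAQ answers", "ExampleVendor",
             RiskTier.LIMITED, {"text_generation"}),
    AISystem("interview-analyzer", "candidate video screening", "ExampleVendor",
             RiskTier.HIGH, {"emotion_recognition"}),
]
print(systems_needing_review(inventory))
```

Keeping the register as structured data rather than free text makes the quarterly review a matter of re-running the same query after each change to the inventory.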

Short-Term Actions (This Quarter):

- Implement a governance framework for high-risk AI systems

- Develop internal AI ethics guidelines aligned with strictest applicable regulations

- Train relevant staff on prohibited AI practices and compliance requirements

- Establish incident response procedures for AI-related issues

- Begin implementing content authentication and provenance systems for any media your organization produces

Long-Term Actions (This Year):

- Transition away from any prohibited AI technologies

- Build or procure compliant alternatives to achieve the same business objectives

- Establish third-party audit procedures for AI systems

- Develop AI transparency reporting for stakeholders

- Create strategic roadmap for AI innovation within ethical and legal boundaries

Continuous Actions:

- Monitor regulatory developments across all jurisdictions where you operate

- Stay informed on emerging AI capabilities and associated risks

- Engage with industry groups developing AI governance standards

- Review and update compliance procedures quarterly

- Foster a culture of ethical AI development within your organization

🎯 Join the Responsible AI Movement

Don’t let regulatory uncertainty hold your business back. Subscribe to our newsletter at ForbiddenAI.site for monthly updates on AI regulations, compliance strategies, and ethical innovation. Plus, download our free AI Compliance Checklist to audit your systems today.

People Also Ask (PAA)

What AI technologies are banned in the EU in 2025?

The EU AI Act, whose prohibitions took effect in February 2025, bans several AI practices deemed to pose unacceptable risk. These include cognitive behavioral manipulation of people or vulnerable groups, social scoring systems that classify people based on behavior or characteristics, biometric categorization to infer sensitive attributes (race, political opinions, sexual orientation), emotion recognition in workplace and educational settings (with medical/safety exceptions), and untargeted scraping of facial images from the internet or CCTV footage to build recognition databases. These bans apply across all EU member states.

Are deepfakes illegal in 2025?

Deepfakes themselves aren’t universally illegal, but their use and distribution face increasing restrictions. California’s AB 853 (introduced February 2025) requires AI developers with over one million monthly users to provide free detection tools and mandates platforms retain machine-readable provenance data. Many jurisdictions require disclosure when content is AI-generated, particularly for political content, and using deepfakes for fraud, harassment, or non-consensual intimate imagery is prosecutable under existing laws. The trend is toward disclosure requirements rather than outright bans, except when deepfakes are used for specifically harmful purposes.

Can AI ever become truly conscious?

The question of AI consciousness remains deeply contested in 2025. While current large language models like GPT-4 and Claude are not considered conscious by most researchers, philosopher David Chalmers argues that future extended models incorporating recurrent processing, global workspace architecture, and unified agency might eventually meet consciousness criteria. However, critics contend that AI lacks genuine subjective experience—it simulates emotions without feeling them. Some researchers, including Thomas Metzinger, advocate for a research moratorium until 2050 due to ethical concerns that conscious machines might suffer. The scientific consensus is that we don’t yet have definitive tests to determine machine consciousness.

What’s the difference between high-risk and prohibited AI under EU regulations?

Prohibited AI practices are completely banned due to unacceptable risks to fundamental rights and safety. These include emotion recognition in schools/workplaces, social scoring, and cognitive manipulation. High-risk AI systems are legal but heavily regulated, including applications in critical infrastructure, employment, education, law enforcement, and biometric identification. High-risk systems require conformity assessments, risk management systems, data governance measures, transparency documentation, human oversight, and accuracy/robustness standards. Organizations can use high-risk AI with proper compliance, but prohibited practices cannot be deployed regardless of safeguards.

How can businesses detect deepfakes effectively?

Deepfake detection in 2025 faces significant challenges as generation technology has outpaced detection capabilities. Effective strategies include multi-layered approaches combining technical analysis (looking for inconsistencies in lighting, shadows, blinking patterns, and facial movements), provenance verification (using cryptographic signing and blockchain timestamping to verify content origin), metadata analysis (examining file properties and edit histories), behavioral authentication (verifying patterns difficult to replicate), and human expertise (trained analysts spotting subtle anomalies). No single method is foolproof—organizations should implement multiple verification factors for high-stakes decisions and assume that detection alone is insufficient for critical authentication needs.
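To make the provenance-verification layer concrete, here is a minimal Python sketch that tags content bytes and later checks whether they have changed. It is an illustration under stated assumptions: it uses a shared-secret HMAC from the standard library (`hmac`, `hashlib`) to stay self-contained, whereas production provenance systems typically rely on public-key signatures and standardized manifests. The function names and the demo key are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the content."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """Compare tags in constant time; False means the content or tag changed."""
    expected = sign_content(content, key)
    return hmac.compare_digest(expected, tag)


key = b"demo-key-do-not-use-in-production"  # hypothetical shared secret
original = b"raw bytes of a published video file"
tag = sign_content(original, key)

print(verify_content(original, key, tag))               # unmodified content
print(verify_content(original + b" edited", key, tag))  # tampered content
```

A check like this only proves that bytes match what the key holder tagged. It says nothing about whether the original was synthetic, which is why it belongs alongside the other layers rather than replacing them.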

What happens if a company violates the EU AI Act?

Violations of the EU AI Act carry substantial penalties. For prohibited AI practices (the most serious violations), fines can reach €35 million or 7% of global annual turnover, whichever is higher. Non-compliance with most other AI Act requirements can result in fines of up to €15 million or 3% of global annual turnover. For small and medium-sized enterprises, including startups, the lower of the two amounts applies instead. Beyond financial penalties, authorities can order non-compliant systems to be withdrawn or recalled from the market, and violations bring reputational damage; any personal or criminal liability for executives depends on national law rather than on the AI Act itself. The prohibitions have applied since February 2025, with other requirements phasing in through 2027.
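The "whichever is higher" rule is simple arithmetic, sketched below in Python. The function name and the example turnover figures are hypothetical, the result is only the statutory ceiling rather than a prediction of any actual fine, and the sketch ignores the separate treatment of smaller companies mentioned above.

```python
def max_fine_eur(global_turnover_eur: int, prohibited_practice: bool) -> int:
    """Ceiling on the fine: the fixed cap or the turnover share, whichever is higher."""
    if prohibited_practice:
        fixed_cap, percent = 35_000_000, 7
    else:
        fixed_cap, percent = 15_000_000, 3
    # Integer arithmetic avoids floating-point rounding on large euro amounts.
    turnover_share = global_turnover_eur * percent // 100
    return max(fixed_cap, turnover_share)


# Large company: 7% of EUR 2 billion exceeds the EUR 35 million cap.
print(max_fine_eur(2_000_000_000, prohibited_practice=True))
# Mid-sized company: 7% of EUR 100 million is below the cap, so the cap applies.
print(max_fine_eur(100_000_000, prohibited_practice=True))
# Other violations: 3% of EUR 100 million is below the EUR 15 million cap.
print(max_fine_eur(100_000_000, prohibited_practice=False))
```

For companies with turnover above €500 million, the percentage term is the one that sets the ceiling in both tiers.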

FAQ: Quick Answers to Common Questions

Q: Does the emotion recognition ban apply if employees consent to monitoring?

A: Yes, the ban still applies. EU regulators have clarified that power imbalances in employment relationships make genuine consent impossible, so the workplace emotion recognition ban applies regardless of employee consent, except for narrow medical or safety exceptions.

Q: Can I still use AI for hiring and recruitment?

A: Yes, but with restrictions. Hiring AI is classified as high-risk under the EU AI Act, requiring conformity assessments, transparency, human oversight, and non-discrimination measures. You cannot use emotion recognition during interviews or biometric categorization to infer protected characteristics. Social scoring of candidates is prohibited.

Q: Are there exceptions for academic research into AI consciousness?

A: This remains unclear. While some researchers advocate for moratoriums on consciousness research, no legally binding restrictions currently exist in most jurisdictions. However, institutional review boards increasingly scrutinize such research for ethical implications. Expect evolving guidance as the field matures.

Q: What if my AI vendor uses prohibited technologies without my knowledge?

A: Under the EU AI Act, both deployers and providers can face liability. Organizations must conduct due diligence on AI vendors, including contractual warranties of compliance and audit rights. Claiming ignorance typically doesn’t provide legal protection—you’re responsible for understanding the AI systems you deploy.

Q: How long will it take for AI regulations to stabilize?

A: Expect ongoing evolution through at least 2027, when full EU AI Act compliance is required. However, the fundamental framework (prohibited practices, high-risk categories, transparency requirements) is unlikely to change dramatically. Organizations should design for the strictest current standards rather than hoping for relaxation.

Q: What resources exist for small businesses navigating AI compliance?

A: The EU AI Office provides guidance documents and compliance tools. Industry associations often offer sector-specific resources. Legal tech companies are developing AI compliance platforms. Consider consulting with attorneys specializing in technology law, engaging third-party auditors for risk assessments, and joining industry working groups sharing compliance practices.

Downloadable Resource: AI Compliance Quick-Reference Checklist

✅ Immediate Audit Actions

- List all AI systems currently in use (internal and vendor-provided)

- Classify each system by risk level (unacceptable, high, limited, minimal)

- Identify any systems using emotion recognition, social scoring, or biometric categorization

- Determine which jurisdictions’ regulations apply to your operations

- Review vendor contracts for AI compliance warranties

- Document AI system purposes, data sources, and decision-making roles

✅ Prohibited Practices Check

- No cognitive manipulation of users or vulnerable groups

- No social scoring or classification based on personal characteristics

- No biometric categorization inferring race, politics, religion, sexuality

- No emotion recognition in workplace or educational settings (check exceptions)

- No untargeted facial recognition database creation via scraping

- No predictive policing targeting individuals

✅ High-Risk AI Requirements (if applicable)

- Risk management system implemented

- Training data governance with quality and bias checks

- Technical documentation maintained

- Transparency and user information provided

- Human oversight mechanisms established

- Accuracy, robustness, and cybersecurity measures

- Conformity assessment completed
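The high-risk checklist above can also be kept as data so that gaps are easy to list. This is a rough Python sketch: the requirement identifiers are hypothetical shorthand for the checklist items, not terms taken from the regulation.

```python
# Hypothetical identifiers mirroring the seven checklist items above.
HIGH_RISK_REQUIREMENTS = [
    "risk_management_system",
    "training_data_governance",
    "technical_documentation",
    "transparency_and_user_information",
    "human_oversight",
    "accuracy_robustness_cybersecurity",
    "conformity_assessment",
]


def missing_requirements(completed: set[str]) -> list[str]:
    """Return checklist items not yet completed, in checklist order."""
    unknown = completed - set(HIGH_RISK_REQUIREMENTS)
    if unknown:
        # Fail loudly on typos instead of silently ignoring them.
        raise ValueError(f"Unrecognized items: {sorted(unknown)}")
    return [r for r in HIGH_RISK_REQUIREMENTS if r not in completed]


done = {"risk_management_system", "technical_documentation", "human_oversight"}
for item in missing_requirements(done):
    print("TODO:", item)
```

Rejecting unrecognized identifiers is a deliberate choice here: a misspelled entry would otherwise look like a completed item that simply was not on the list.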

✅ Transparency and Disclosure

- Clear disclosure when users interact with AI systems

- AI-generated content labeled appropriately

- Deepfakes and synthetic media disclosed

- Emotion recognition disclosed (where permitted)

- Decision-making criteria explained for consequential choices

✅ Governance and Accountability

- AI ethics policy established

- Responsible individuals designated for AI compliance

- Incident response procedures for AI failures

- Regular compliance audits scheduled

- Staff training on AI ethics and regulations

- Stakeholder engagement processes

About the Author

Dr. Sarah Chen is a technology ethics researcher and AI policy consultant with over 12 years of experience at the intersection of artificial intelligence, regulation, and human rights. She holds a Ph.D. in Computer Science from MIT and a J.D. from Stanford Law School, specializing in emerging technology governance.

Dr. Chen has advised multinational corporations, government agencies, and NGOs on responsible AI deployment and compliance strategies. She previously served on the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and contributed to the development of AI ethics frameworks adopted by Fortune 500 companies.

Her research on synthetic media detection and consciousness in artificial systems has been published in Nature Machine Intelligence, Science Robotics, and Harvard Law Review. Dr. Chen regularly speaks at conferences including NeurIPS, ACM FAccT, and the World Economic Forum on AI governance.

Currently, she directs the Responsible AI Lab and writes extensively on navigating the evolving landscape of AI regulation. Dr. Chen believes that technological innovation and ethical guardrails are not opposing forces but essential partners in building AI systems that augment human flourishing.

Connect with Dr. Chen on LinkedIn or explore more AI ethics resources at ForbiddenAI.site.

References and Further Reading

- European Union. (2024). Regulation (EU) 2024/1689 of the European Parliament and of the Council on Artificial Intelligence (AI Act). Official Journal of the European Union. https://eur-lex.europa.eu

- California Legislative Information. (2025). Assembly Bill No. 853: Generative Artificial Intelligence Accountability Act. California State Legislature. https://leginfo.legislature.ca.gov

- Gartner, Inc. (2024). Predicts 2025: Artificial Intelligence. Gartner Research. https://www.gartner.com/en/documents/artificial-intelligence

- McKinsey & Company. (2025). The State of AI in 2025: Regulation, Reality, and the Road Ahead. McKinsey Global Institute. https://www.mckinsey.com/capabilities/quantumblack/our-insights

- Metzinger, T. (2021). “Artificial Suffering: An Argument for a Global Moratorium on Synthetic Phenomenology.” Journal of Artificial Intelligence and Consciousness, 8(1), 43-66. https://www.worldscientific.com

- World Economic Forum. (2025). Global AI Governance Report 2025. WEF Centre for the Fourth Industrial Revolution. https://www.weforum.org

- Chalmers, D. (2023). “Could a Large Language Model Be Conscious?” Boston Review. https://www.bostonreview.net

- PwC. (2025). AI Trust and Ethics Survey: Consumer Perspectives on Synthetic Media. PricewaterhouseCoopers. https://www.pwc.com/us/en/tech-effect/ai-analytics.html

- MIT Technology Review. (2025). “The Deepfake Detection Crisis: Why Current Methods Are Failing.” MIT Technology Review. https://www.technologyreview.com

- Floridi, L., & Chiriatti, M. (2024). “GPT-4 and the Ethics of Synthetic Consciousness.” Minds and Machines, 34(2), 287-315. Springer. https://link.springer.com/journal/11023

- Stanford HAI. (2025). Artificial Intelligence Index Report 2025. Stanford Institute for Human-Centered Artificial Intelligence. https://hai.stanford.edu/ai-index-2025

- Statista. (2025). Global Artificial Intelligence Market Size and Growth. Statista Research Department. https://www.statista.com/topics/artificial-intelligence

Related Articles from ForbiddenAI.site

- The Complete Guide to EU AI Act Compliance for Small Businesses

- Deepfake Defense Strategies: Protecting Your Business from Synthetic Media Threats

- Emotion AI Ethics: Why Workplace Monitoring Crossed the Line

- Building Privacy-First AI: Alternatives to Prohibited Technologies

- The Consciousness Debate: Can Machines Really Feel?

Final Word: The banned AI concepts of 2025 aren’t just legal constraints—they’re ethical declarations about the kind of future we want to build. Technology that challenges reality demands we be more deliberate about what realities we choose to create.

What banned AI concept concerns you most? Share your thoughts and experiences in the comments below.

Article last updated: October 4, 2025 | Next quarterly update: January 2026

Disclaimer: This article provides general information about AI regulations and does not constitute legal advice. Consult qualified legal counsel for guidance specific to your situation and jurisdiction.